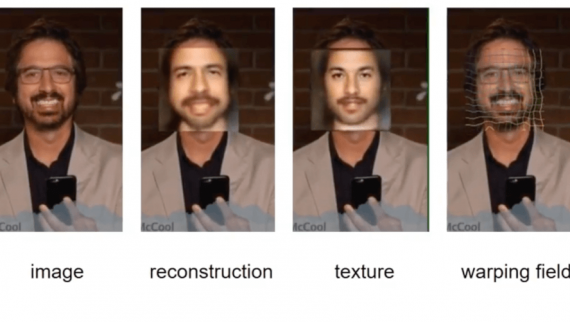

Deforming Autoencoders (DAEs) – Learning Disentangled Representations

21 September 2018

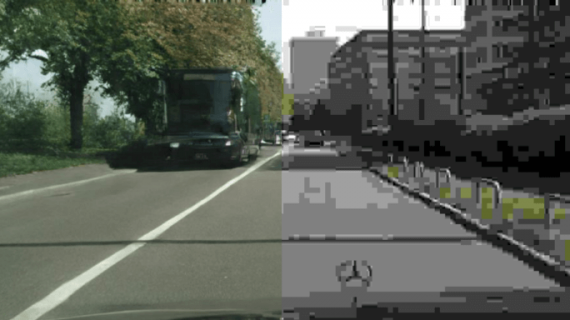

Generative models are drawing a lot of attention within the machine learning research community. Models of this kind have practical applications in many different domains. Two of the most commonly used…