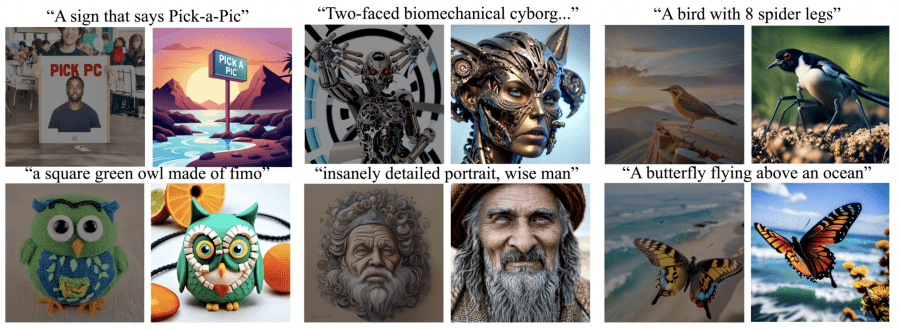

Stability AI, in collaboration with Tel Aviv University, has released Pick-a-Pic, a dataset of over 500,000 examples of generated images paired with user preference ratings. They have also introduced PickScore, a scoring function that outperforms human annotators at predicting user preferences.

Evaluating Generated Images

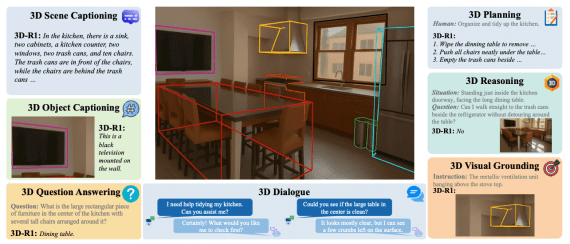

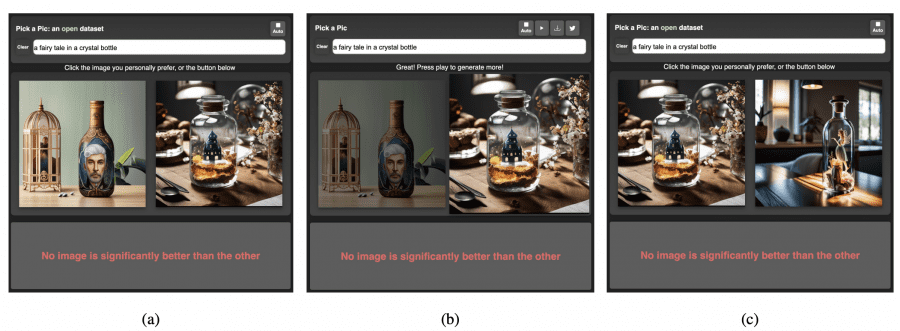

To create the dataset, researchers developed a web application based on Stable Diffusion 1.5 and SDXL beta models. The application shows users a pair of images generated from the same text prompt, and users either select the image they prefer or indicate that there is no clear winner. Each example in the dataset contains the text prompt, the two generated images, and a label indicating the preferred option.
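The record layout described above can be sketched as a small Python data structure. This is only an illustration: the class name `PreferenceExample`, its field names, and the helper `make_label` are hypothetical and are not taken from the released dataset's schema.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PreferenceExample:
    """One comparison: a prompt, two generated images, and the user's choice.

    Field names are hypothetical, chosen for illustration only.
    """
    prompt: str
    image_a: str                 # path or URL of the first image
    image_b: str                 # path or URL of the second image
    label: Tuple[float, float]   # preference mass on (image_a, image_b)


def make_label(choice: str) -> Tuple[float, float]:
    """Turn a user's choice into a preference distribution over the pair."""
    if choice == "a":
        return (1.0, 0.0)
    if choice == "b":
        return (0.0, 1.0)
    if choice == "tie":          # the user saw no clear winner
        return (0.5, 0.5)
    raise ValueError(f"unknown choice: {choice!r}")


example = PreferenceExample(
    prompt="a watercolor painting of a lighthouse",
    image_a="images/0001_a.png",
    image_b="images/0001_b.png",
    label=make_label("tie"),
)
```

Encoding the label as a pair of probabilities, rather than a single class index, makes it straightforward to represent the "no clear winner" case alongside the two clear-preference cases.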

The PickScore Evaluation Function

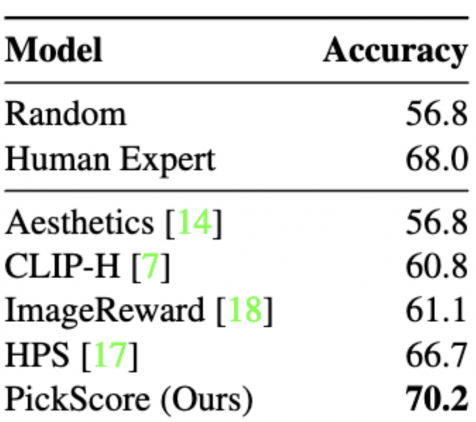

The dataset was used to train a scoring function that rates how well an image matches a given text prompt. Researchers fine-tuned the CLIP-H model with an objective similar to the reward-model objective used in InstructGPT. The objective maximizes the probability assigned to the preferred image over the non-preferred one or, when the user marked a tie, pushes the two images toward equal probability. The results show that PickScore predicts user preferences with an accuracy of 70.2%, outperforming human annotators at 68.0%. Zero-shot CLIP-H and the aesthetics predictor perform closer to chance, with accuracies of 60.8% and 56.8% respectively.
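One plausible reading of this objective is sketched below: the two image scores are turned into preference probabilities with a softmax, and the loss is the KL divergence between the user's label and those probabilities. This is a minimal sketch, not the authors' training code. The function names are hypothetical, and the scores are assumed to be plain floats (in practice they would come from the fine-tuned CLIP-H text and image encoders).

```python
import math
from typing import Tuple


def log_preference_probs(score_a: float, score_b: float) -> Tuple[float, float]:
    """Log-softmax over the two scores, computed in a numerically stable way."""
    m = max(score_a, score_b)
    log_z = m + math.log(math.exp(score_a - m) + math.exp(score_b - m))
    return score_a - log_z, score_b - log_z


def preference_loss(score_a: float, score_b: float,
                    label: Tuple[float, float]) -> float:
    """KL(label || predicted) for one pair.

    label is (1, 0) or (0, 1) for a clear preference and (0.5, 0.5) for a tie.
    Terms with zero label mass contribute nothing to the sum.
    """
    log_probs = log_preference_probs(score_a, score_b)
    return sum(p * (math.log(p) - log_q)
               for p, log_q in zip(label, log_probs) if p > 0)


# The loss is lower when the higher score belongs to the preferred image.
loss_agree = preference_loss(3.0, 1.0, (1.0, 0.0))
loss_disagree = preference_loss(3.0, 1.0, (0.0, 1.0))
```

For a clear preference this reduces to the negative log-probability of the chosen image. For a tie, the loss is zero only when both images receive the same score.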

Comparison with FID

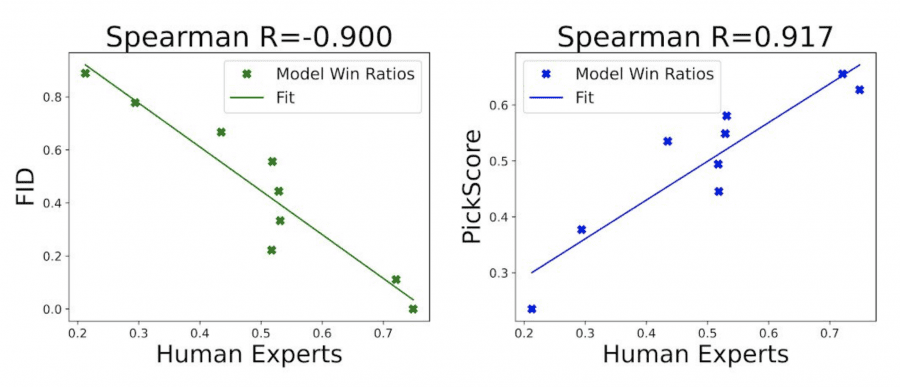

A comparison between PickScore and the established Fréchet Inception Distance (FID) metric, commonly used to evaluate generative models, shows that PickScore correlates strongly and positively with user preferences (0.917), even when evaluated on MS-COCO captions. In contrast, FID yields a negative correlation (-0.900). PickScore also correlates significantly more strongly with “expert” rankings, making it a more reliable metric than the existing alternatives it was compared against.
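To make the kind of comparison above concrete, the sketch below computes a rank correlation between a human-derived ranking of models and the ranking implied by an automatic metric. The text does not say which correlation coefficient was used, so Spearman's rank correlation is an assumption here, and all numbers in the example are made up for illustration.

```python
import math
from typing import List, Sequence


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks in ascending order; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical numbers for four models (not from the paper).
human_win_rate = [0.62, 0.48, 0.55, 0.35]
metric_score = [21.3, 20.1, 20.9, 19.0]
rho = spearman(human_win_rate, metric_score)
```

A value near 1 means the metric orders the models the same way users do, while a value near -1 means it orders them in reverse. When the metric is one where lower is better, as with FID, the sign convention needs to be stated explicitly before interpreting the result.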

The dataset, consisting of over half a million examples, and the scoring function are both openly accessible. PickScore is a scoring function for images generated from text prompts that aligns more closely with human judgments than other publicly available metrics.