Researchers from the Max Planck Institute, MIT, and Google have introduced DragGAN, an interactive method for manipulating images generated by Generative Adversarial Networks (GANs). Users select reference points on an image and specify target positions for them. DragGAN then changes the pose, shape, facial expression, and layout of objects such as animals, cars, humans, and landscapes. Let’s look at how the method works.

DragGAN comprises two key components:

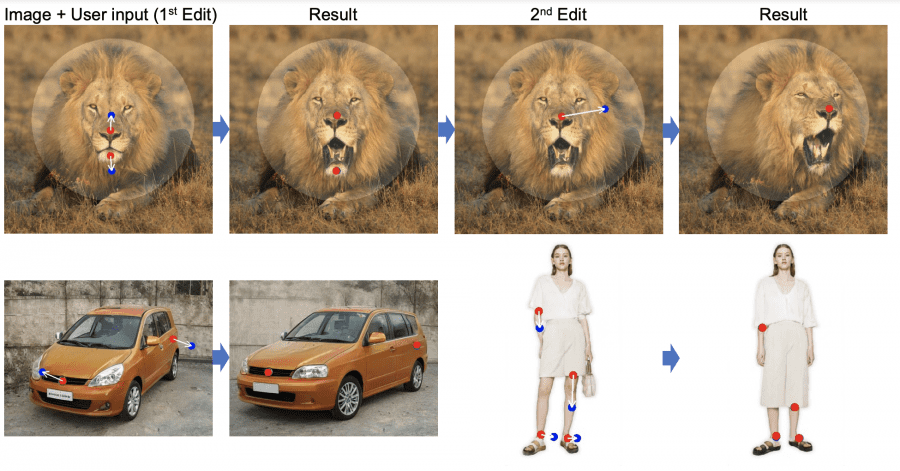

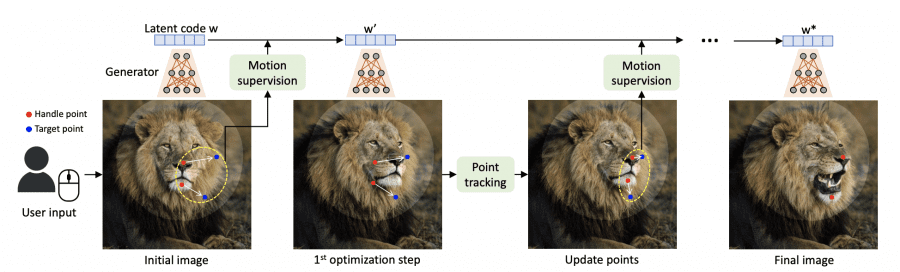

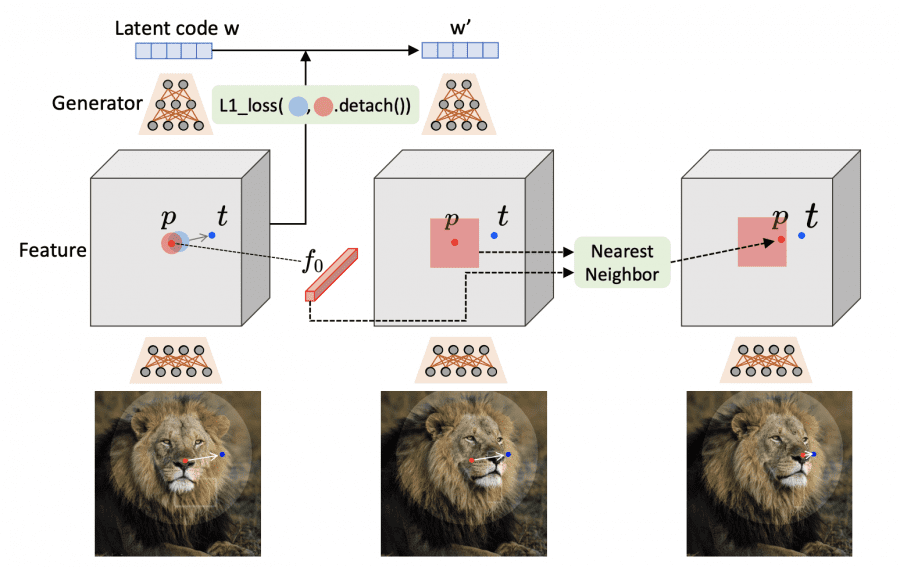

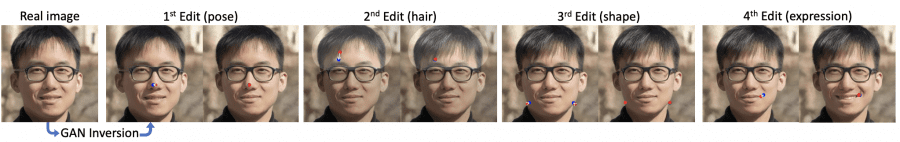

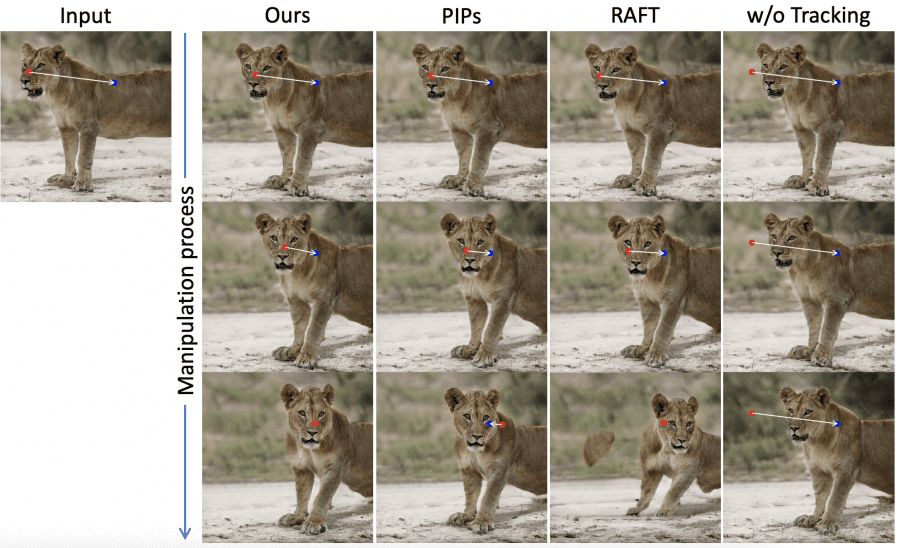

- Motion supervision: This component is based on a feature-based displacement model, which guides reference points (represented by red dots) towards their corresponding target positions (represented by blue dots).

- Point tracking: A novel approach to point tracking that utilizes discriminative GAN features to consistently locate the reference points.

Methodology in Detail

Consider an image $I \in \mathbb{R}^{3 \times H \times W}$ generated by a GAN with latent code $\mathbf{w}$. DragGAN lets users specify reference points $\{\mathbf{p}_i = (x_{p,i}, y_{p,i}) \mid i = 1, 2, \dots, n\}$ and corresponding target points $\{\mathbf{t}_i = (x_{t,i}, y_{t,i}) \mid i = 1, 2, \dots, n\}$, where $\mathbf{t}_i$ is the target for $\mathbf{p}_i$. The goal is to move objects in the image so that the semantic positions marked by the reference points (e.g., the nose or chin) reach their respective target points. Users can also supply a binary mask $M$ marking which region of the image may move, so that only that area is edited.
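To make these inputs concrete, here is a minimal sketch of how such an edit request could be represented in Python. `DragEdit`, its fields, and the `done` tolerance are hypothetical names chosen for illustration, not part of the DragGAN code. Points are assumed to be (x, y) pixel coordinates, and the mask is assumed to use 1 for the region that may change.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


@dataclass
class DragEdit:
    """Hypothetical container for a DragGAN-style edit request (not the paper's code)."""
    handles: List[Point]   # reference points p_i (red dots)
    targets: List[Point]   # target points t_i (blue dots)
    height: int
    width: int
    mask: Optional[List[List[int]]] = None  # optional binary mask M, 1 = may change

    def __post_init__(self):
        # Every reference point needs exactly one target.
        if len(self.handles) != len(self.targets):
            raise ValueError("each reference point p_i needs exactly one target t_i")
        # All points must lie inside the H x W image.
        for x, y in self.handles + self.targets:
            if not (0 <= x < self.width and 0 <= y < self.height):
                raise ValueError(f"point ({x}, {y}) lies outside the {self.width}x{self.height} image")
        # The mask, if given, must match the image size.
        if self.mask is not None and (
            len(self.mask) != self.height or any(len(row) != self.width for row in self.mask)
        ):
            raise ValueError("mask must have shape H x W")

    def remaining_distances(self) -> List[float]:
        """Euclidean distance from each reference point to its target."""
        return [math.dist(p, t) for p, t in zip(self.handles, self.targets)]

    def done(self, tol: float = 1.0) -> bool:
        """True once every reference point is within `tol` pixels of its target."""
        return all(d <= tol for d in self.remaining_distances())
```

Validating point/target pairing and bounds up front keeps the later optimization loop free of shape checks.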

DragGAN works iteratively, alternating between motion supervision and point tracking. In each motion supervision step, the latent code $\mathbf{w}$ is optimized so that the image content at the reference points (red dots) moves a small step toward the target positions (blue dots). In the following point tracking step, the positions of the reference points are updated so that they keep following the same object parts in the changed image. The loop repeats until every reference point reaches its target.
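The alternating loop can be sketched as follows. This is a toy illustration under stated assumptions: `drag`, `toy_motion_step`, and `make_toy_tracker` are hypothetical names, the "image" is a single rigid object whose offset is the latent itself, and the actual method would replace the two callables with a gradient step on $\mathbf{w}$ and feature-based tracking.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def drag(latent, handles: List[Point], targets: List[Point],
         motion_step: Callable, track: Callable,
         tol: float = 0.5, max_iters: int = 500):
    """Alternate motion supervision and point tracking until every reference
    point is within `tol` pixels of its target (or max_iters is reached)."""
    for it in range(max_iters):
        if all(math.dist(p, t) <= tol for p, t in zip(handles, targets)):
            return latent, handles, it
        latent = motion_step(latent, handles, targets)  # move content a small step
        handles = track(latent, handles)                # re-locate the reference points
    return latent, handles, max_iters


# Toy stand-ins (hypothetical): one rigid object whose offset IS the latent.
def toy_motion_step(latent, handles, targets):
    """Shift the object by a unit step along d = (t - p) / ||t - p|| (single handle)."""
    (px, py), (tx, ty) = handles[0], targets[0]
    norm = math.hypot(tx - px, ty - py)
    return (latent[0] + (tx - px) / norm, latent[1] + (ty - py) / norm)


def make_toy_tracker(p0: Point, w0: Point):
    """Tracker for the rigid toy: the point moves exactly with the object offset."""
    def track(latent, handles):
        return [(p0[0] + latent[0] - w0[0], p0[1] + latent[1] - w0[1])]
    return track


latent, handles, iters = drag((0.0, 0.0), [(10.0, 10.0)], [(20.0, 10.0)],
                              toy_motion_step, make_toy_tracker((10.0, 10.0), (0.0, 0.0)))
print(iters, handles)  # 10 [(20.0, 10.0)]
```

Keeping motion supervision and tracking as separate callables mirrors the two-stage structure described above. Each stage can be swapped out without touching the loop.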

Motion supervision relies on the discriminative features of the generator's intermediate feature maps, which makes a simple and effective loss function possible. Specifically, the authors use the feature maps $F$ produced after the 6th block of StyleGAN2. These performed best among the features they tried, offering a good trade-off between spatial resolution and discriminativeness.
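To the best of our understanding, the motion supervision loss in the DragGAN paper has the form $\mathcal{L} = \sum_i \sum_{\mathbf{q}_i \in \Omega_1(\mathbf{p}_i, r_1)} \lVert F(\mathbf{q}_i) - F(\mathbf{q}_i + \mathbf{d}_i) \rVert_1 + \lambda \lVert (F - F_0) \cdot (1 - M) \rVert_1$. Here $\mathbf{d}_i = (\mathbf{t}_i - \mathbf{p}_i) / \lVert \mathbf{t}_i - \mathbf{p}_i \rVert_2$ and $F_0$ are the features of the initial image. The pure-Python sketch below only evaluates this value on a small feature map stored as nested lists `F[y][x][c]`. There is no autodiff, so the stop-gradient on $F(\mathbf{q}_i)$ is noted in a comment. The function names, the circular neighborhood, and the example values are assumptions for illustration.

```python
import math


def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def bilinear(F, x, y):
    """Bilinearly sample feature map F (indexed F[y][x][c]) at real-valued (x, y)."""
    H, W = len(F), len(F[0])
    x = min(max(x, 0.0), W - 1.0)  # clamp to the image border
    y = min(max(y, 0.0), H - 1.0)
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, W - 1), min(y0 + 1, H - 1)
    ax, ay = x - x0, y - y0
    out = []
    for c in range(len(F[y0][x0])):
        top = (1 - ax) * F[y0][x0][c] + ax * F[y0][x1][c]
        bottom = (1 - ax) * F[y1][x0][c] + ax * F[y1][x1][c]
        out.append((1 - ay) * top + ay * bottom)
    return out


def motion_supervision_loss(F, F0, handles, targets, mask, r1, lam):
    """Evaluate the motion supervision loss value (no gradients are computed here)."""
    H, W = len(F), len(F[0])
    loss = 0.0
    for (px, py), (tx, ty) in zip(handles, targets):
        norm = math.hypot(tx - px, ty - py)
        if norm == 0.0:
            continue  # this reference point has already reached its target
        dx, dy = (tx - px) / norm, (ty - py) / norm  # unit direction d_i
        for qy in range(max(0, math.floor(py - r1)), min(H, math.floor(py + r1) + 1)):
            for qx in range(max(0, math.floor(px - r1)), min(W, math.floor(px + r1) + 1)):
                if math.hypot(qx - px, qy - py) < r1:  # q in Omega_1(p_i, r1)
                    # In an autodiff framework F(q) would be detached (stop-gradient),
                    # so gradients flow only through the shifted sample F(q + d_i).
                    loss += l1(F[qy][qx], bilinear(F, qx + dx, qy + dy))
    # Penalize feature changes outside the editable region (where M == 0).
    for y in range(H):
        for x in range(W):
            if not mask[y][x]:
                loss += lam * l1(F[y][x], F0[y][x])
    return loss
```

Comparing each feature with the feature one unit step further along $\mathbf{d}_i$ means that minimizing the loss (with $F(\mathbf{q}_i)$ held fixed) pulls the content at $\mathbf{q}_i$ toward the target by a small step.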

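Point tracking is performed in the same feature space using nearest-neighbor search. After each motion supervision step, each reference point moves to the location in a small neighborhood whose feature is closest to the feature originally observed at that point.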
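Here is a minimal sketch of the nearest-neighbor update for a single reference point. It assumes a feature map `F_new` from the updated image and `f_init`, the feature originally sampled at that point. `track_point` is a hypothetical helper, and the square search window of ±`r2` pixels is an assumption of this sketch.

```python
import math


def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def track_point(F_new, f_init, p, r2):
    """Return the pixel near p whose feature in F_new (indexed F_new[y][x][c])
    is closest in L1 distance to f_init, searching +/- r2 pixels around p."""
    H, W = len(F_new), len(F_new[0])
    px, py = p
    best, best_dist = p, math.inf
    for qy in range(max(0, math.floor(py - r2)), min(H, math.floor(py + r2) + 1)):
        for qx in range(max(0, math.floor(px - r2)), min(W, math.floor(px + r2) + 1)):
            dist = l1(F_new[qy][qx], f_init)
            if dist < best_dist:
                best, best_dist = (qx, qy), dist
    return best
```

Suppose the object shifts two pixels to the right. A search radius of 3 then finds the point's new location exactly, while a radius of 1 only gets partway there. The window therefore has to cover how far content can move in one step.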

DragGAN Results

DragGAN produces realistic results even in challenging cases. These include hallucinating content that was previously occluded and deforming shapes in a way that respects the object's rigidity. In qualitative and quantitative evaluations, the authors report that DragGAN outperforms previous approaches in both image manipulation and point tracking.

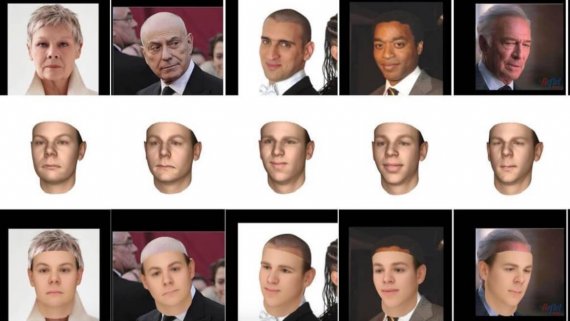

The authors also demonstrate DragGAN on real photographs. A real image is first mapped into StyleGAN's latent space through GAN inversion, after which its pose, hair, shape, and expression can be edited.

In comparisons with RAFT (an optical flow method), PIPs (a point-tracking method), and a variant that performs no tracking, DragGAN tracks the reference points more accurately.

Because the reference points are tracked more precisely, the resulting edits are also more accurate.

In conclusion, DragGAN offers an interactive, point-based way to manipulate GAN-generated images, giving users precise control over where image content moves. Its results make it a promising tool for applications in computer vision and the creative industries.
