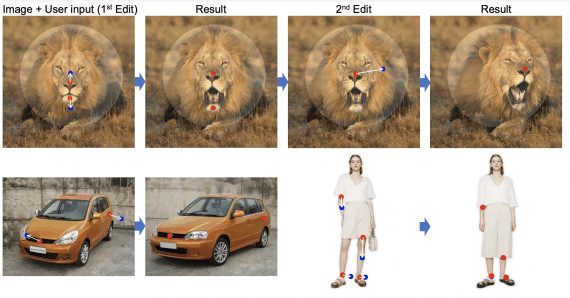

DragGAN: Open Source Model for Manipulating GAN-Generated Images

6 July 2023

DragGAN: Open Source Model for Manipulating GAN-Generated Images

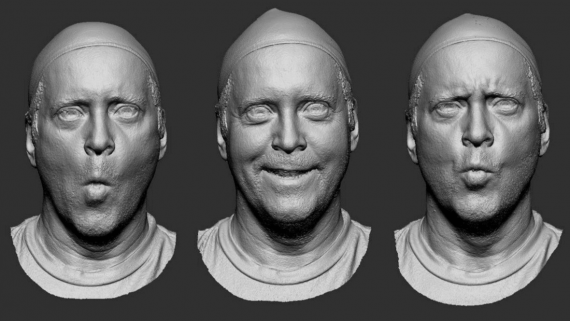

Researchers from the Max Planck Institute, MIT, and Google have introduced DragGAN, an innovative approach that allows for seamless manipulation of images generated using Generative Adversarial Networks (GANs). By leveraging…