Unique3D Model Generates 3D Mesh from a Single Image in 30 Seconds

27 June 2024

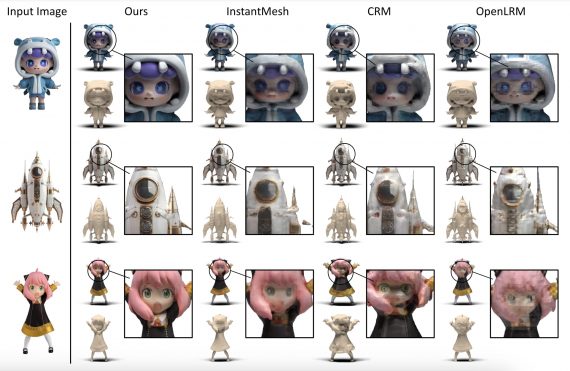

Unique3D is a state-of-the-art model for generating 3D meshes from a single image, noted for its efficiency and high fidelity. Its code and weights are available as open source. This approach…