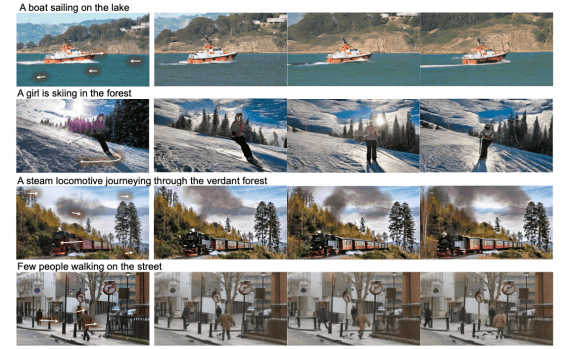

Microsoft DragNUWA: Video Generation via Object Trajectories

15 January 2024

Microsoft DragNUWA: Video Generation via Object Trajectories

Microsoft has released the DragNUWA weights – a cross-domain video generation model that offers more precise control over the resulting output compared to similar models. Control is achieved by simultaneously…