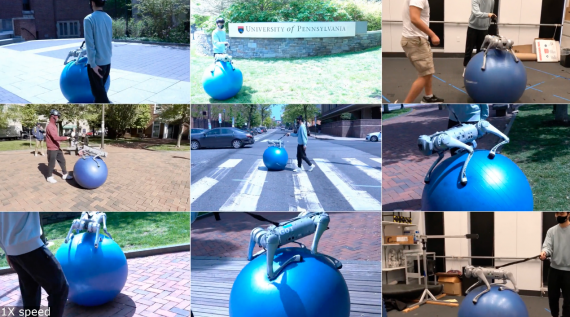

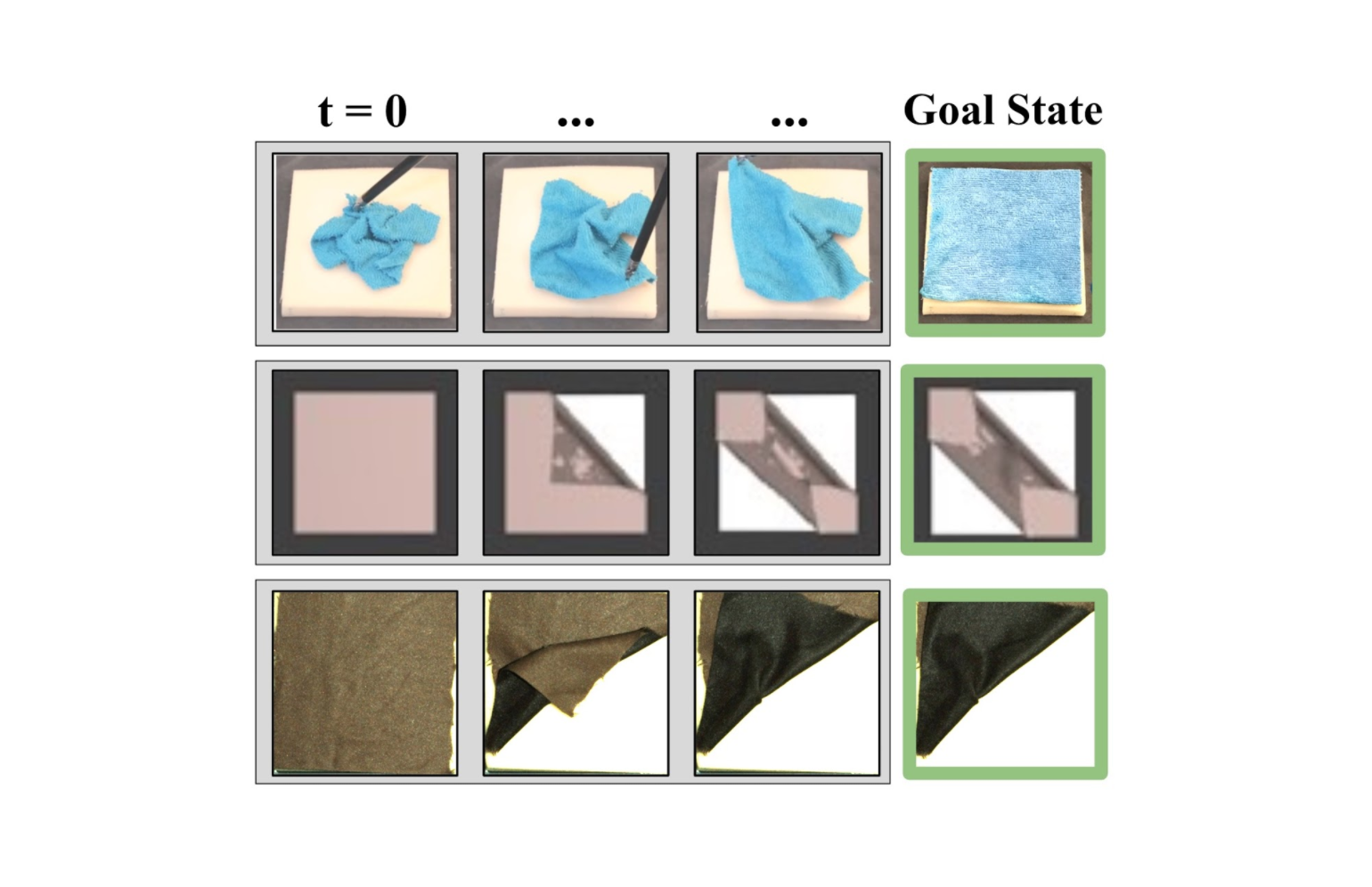

Researchers from the University of California and the Honda Research Institute trained a robot to fold fabric. The algorithm is based on a framework for teaching visual dynamics of objects based on RGB images – Visual Foresight. Such robots can be useful in the textile industry and surgery.

Textile automation has applications in robotics, textiles, nursing, and surgery. Existing methods for tissue manipulation are being developed for specific tasks. This makes it difficult to scale them to similar tasks from other domains.

More about the model

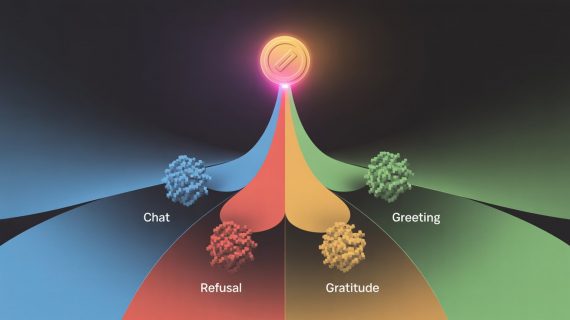

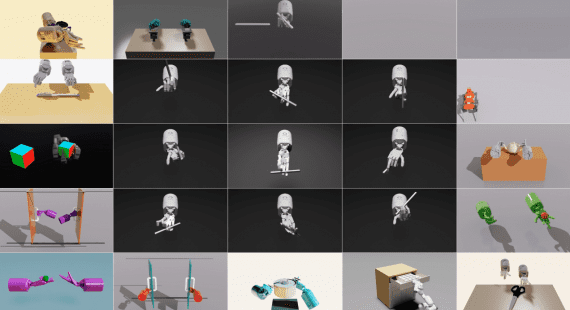

To learn the dynamics of the fabric, the researchers used a modified Visual Foresight framework. The framework can be reused in a tissue manipulation task with a single policy for the RL agent, negotiated for the end goal. In this case, the end goal is the target tissue position. The researchers extended their VisuoSpatial Foresight (VSF) framework, which learns visual dynamics from randomly spaced RGB images from the same domain. Simultaneously with the dynamics, the VSF learns depth maps. The learning process takes place in a simulation.

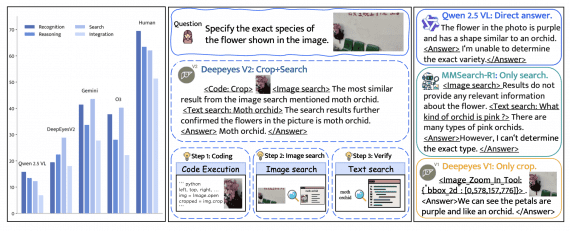

Algorithm testing

In early work, VSFs were tested on multi-step tasks of smoothing and folding fabric. The system was compared with 5 basic approaches in simulation and with the da Vinci Research Kit (dVRK) surgical robot. In the current work at VSF, 4 aspects were changed: data generation, model selection for visual dynamics, error functionality and optimization algorithm. Experiments have shown that training models of visual dynamics on longer actions improves the efficiency of models on the task of folding fabric by 76%. VSF solves the problem of folding fabric with 90% accuracy.