Many daily activities require identity verification. It serves as a security mechanism for everything from access control on computer systems to border crossings and bank transactions. However, in many of these activities verification is still done manually, which makes it slow and dependent on human operators.

An automated system for identity verification can significantly speed up the process and provide a seamless security check wherever we need to prove our identity. One of the simplest ways to build one is to design a system that matches ID photos with selfie pictures.

Previous work

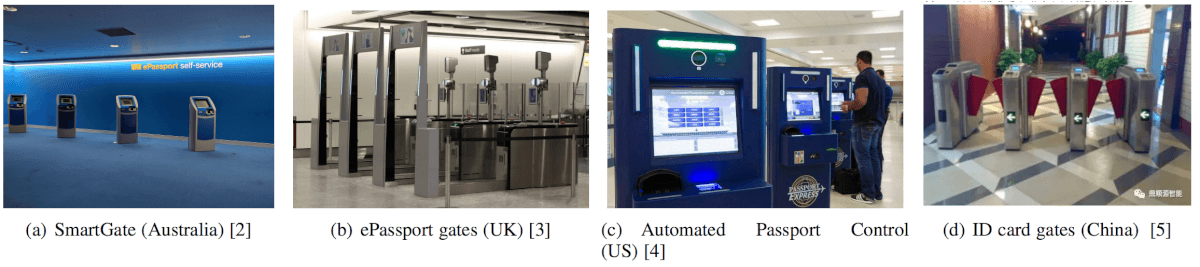

There have been both successful and unsuccessful attempts to deploy automated systems for identity verification. A successful example is Australia’s SmartGate, an automated self-service border control system operated by the Australian Border Force and located at immigration checkpoints in the arrival halls of eight Australian international airports. It uses a camera to capture a verification picture and tries to match it to the person’s ID. China has also introduced such systems at train stations and airports.

While there have also been attempts to match ID documents and selfies using traditional computer vision techniques, the better-performing methods rely on deep learning. Zhu et al. proposed the first deep learning approach for document-to-selfie matching using Convolutional Neural Networks.

State-of-the-art idea

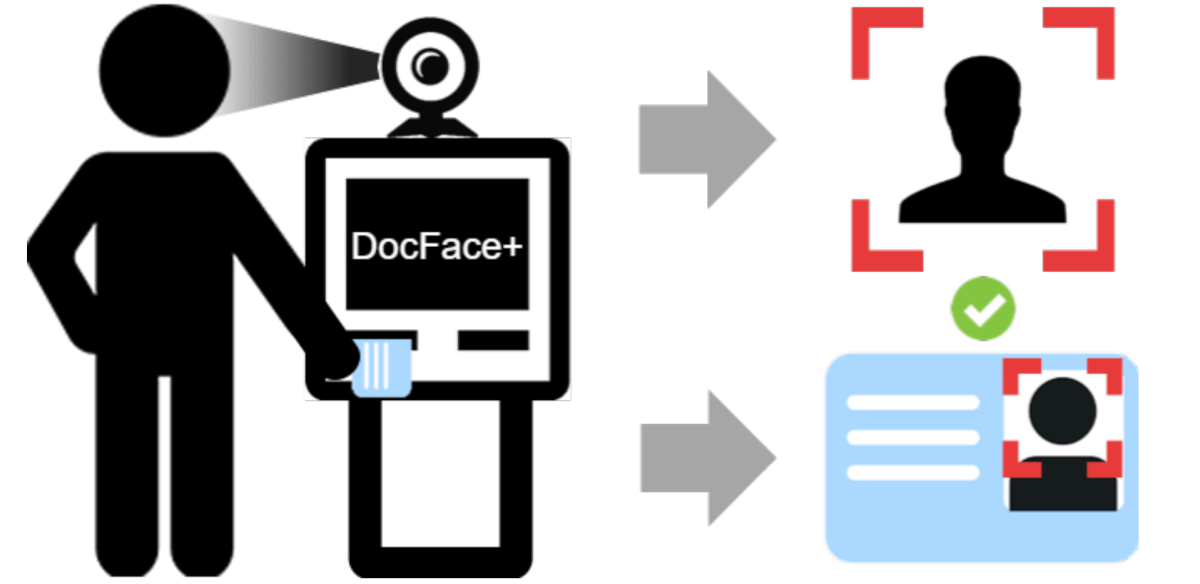

In their new paper, researchers from Michigan State University propose an improved version of DocFace, their deep learning approach for document-selfie matching.

They show that gradient-based optimization methods converge slowly when many classes have very few samples, as is the case in existing ID-selfie datasets. To overcome this shortcoming, they propose a method called Dynamic Weight Imprinting (DWI). Additionally, they introduce a new recognition system for learning unified representations from ID-selfie pairs and an open-source face matcher for ID-selfie matching called DocFace+.

Method

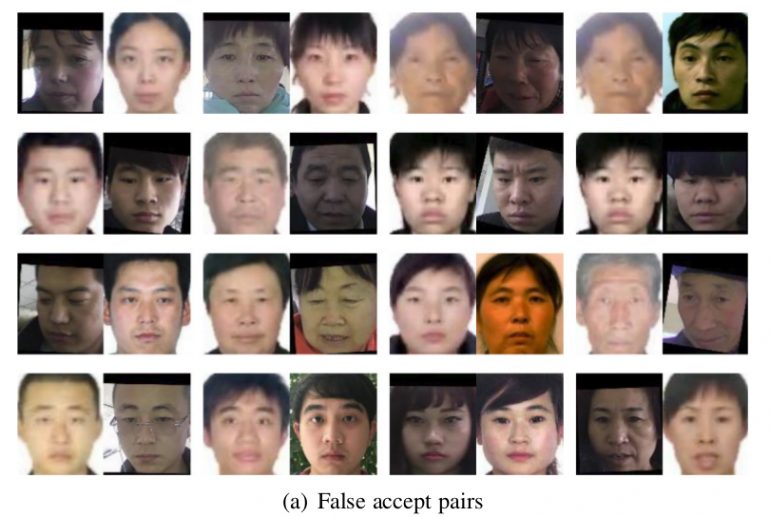

Building an automated system for ID-selfie matching involves many problems and constraints, and several of its challenges differ from those of general face recognition.

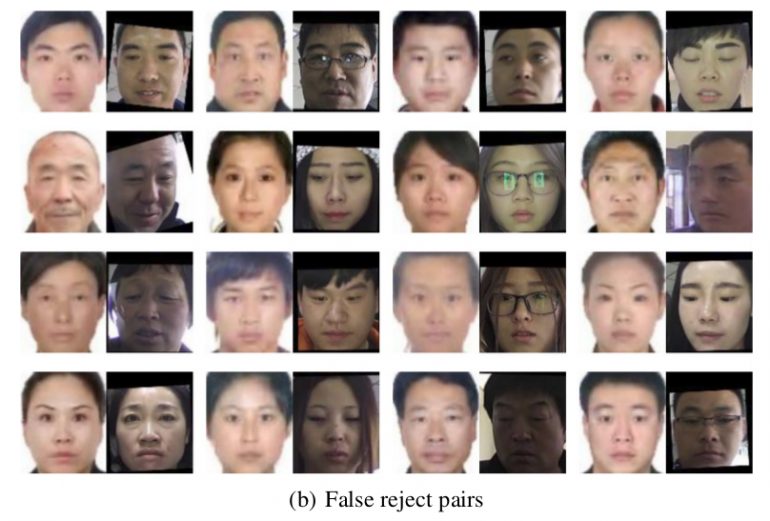

The two main challenges are the low quality of document (and selfie) photos due to compression, and the large time gap between the date the document was issued and the moment of verification.

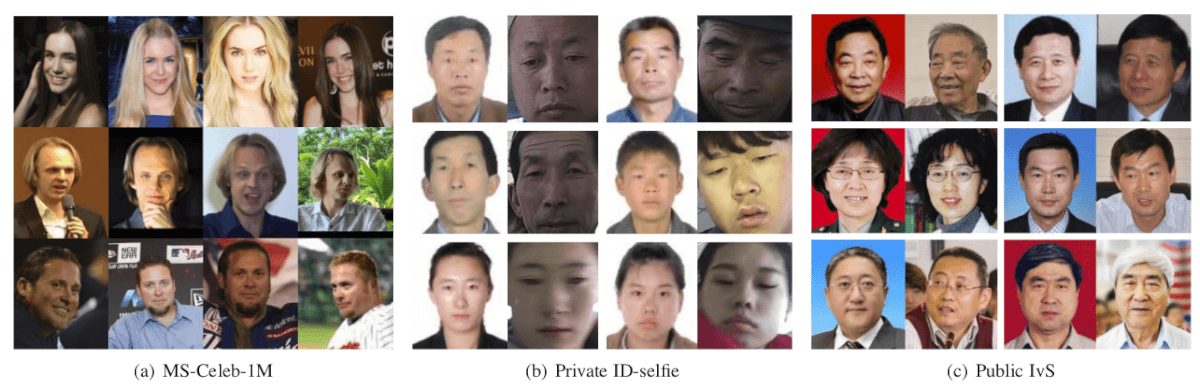

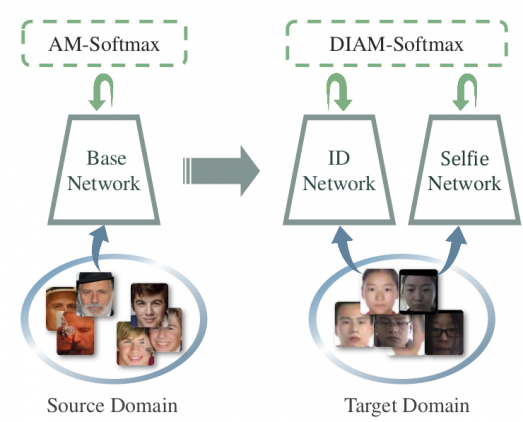

The whole method is based on transfer learning. A base neural network model is trained on a large-scale face dataset (MS-Celeb-1M), and the learned features are then transferred to the target domain of ID-selfie pairs.

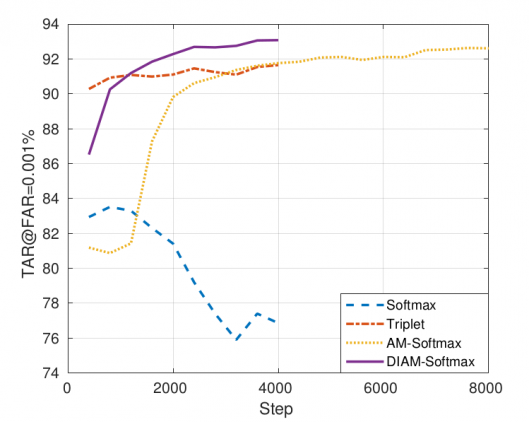

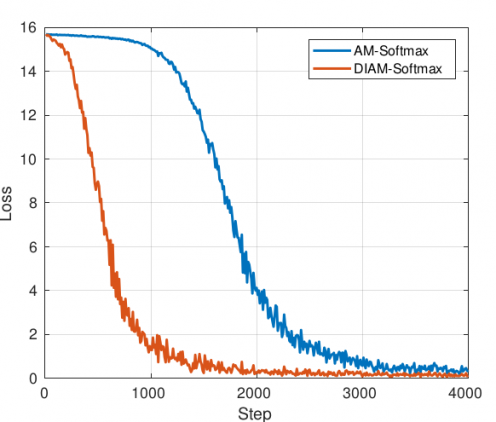

The researchers argue that, with many classes having very few samples, convergence is very slow and training often gets stuck in local minima. They therefore propose to use the Additive Margin Softmax (AM-Softmax) loss function together with a novel optimization method that they call Dynamic Weight Imprinting (DWI).
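To make the loss concrete, here is a minimal sketch of AM-Softmax for a single sample, written in plain Python. It follows the commonly published form of the loss: features and class weights are L2-normalized, the margin is subtracted from the cosine of the true class only, and all cosines are multiplied by a scale factor. The function names and the values `scale=30.0` and `margin=0.35` are illustrative assumptions, not settings taken from the DocFace+ paper.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return list(vec) if norm == 0 else [x / norm for x in vec]

def am_softmax_loss(feature, class_weights, label, scale=30.0, margin=0.35):
    """AM-Softmax loss for one sample (scale and margin are assumed values).

    feature:       embedding of the sample (list of floats)
    class_weights: one weight vector per class (list of lists)
    label:         index of the true class
    """
    f = l2_normalize(feature)
    # Cosine similarity between the sample and every class weight vector.
    cosines = [sum(a * b for a, b in zip(f, l2_normalize(w)))
               for w in class_weights]
    # Subtract the additive margin from the true class only, then rescale.
    logits = [scale * (c - margin) if j == label else scale * c
              for j, c in enumerate(cosines)]
    # Numerically stable log-sum-exp for the softmax normalizer.
    top = max(logits)
    log_z = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_z - logits[label]

weights = [[1.0, 0.0], [0.0, 1.0]]
loss_with_margin = am_softmax_loss([1.0, 0.0], weights, label=0)
loss_without_margin = am_softmax_loss([1.0, 0.0], weights, label=0, margin=0.0)
loss_wrong_class = am_softmax_loss([1.0, 0.0], weights, label=1)
```

The margin makes the loss strictly larger than plain normalized softmax for the same input, so the network has to separate the true class from the others by a wider angular gap before the loss becomes small.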

Dynamic Weight Imprinting

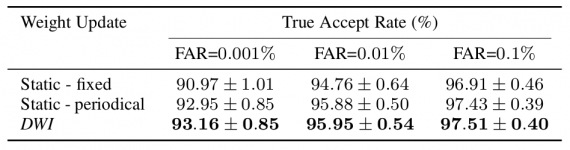

Because stochastic gradient descent updates the network with mini-batches, in a two-shot case such as ID-selfie matching each class weight vector receives an attraction signal only twice per epoch. Such sparse signals make little difference to the classifier weights. To overcome this problem, the authors propose a new optimization method that updates the weights directly from the sample features, which avoids underfitting of the classifier weights and accelerates convergence.

Compared with stochastic gradient descent and other gradient-based optimization methods, the proposed DWI updates the weights based only on genuine samples. It touches only the weights of classes that are present in the mini-batch, so it also works well with very large datasets where the weight matrix of all classes is too large to load and only a subset of weights can be sampled for training.
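The idea of updating classifier weights from sample features can be sketched as follows. This is a simplified illustration and not the exact update rule from the paper: it assumes that the weight of each class present in the mini-batch is simply overwritten by the normalized mean of that class's normalized features, and the paper's rule may differ, for example in how ID and selfie features are combined. The name `imprint_weights` and the dictionary layout are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return list(vec) if norm == 0 else [x / norm for x in vec]

def imprint_weights(class_weights, features, labels):
    """Return a copy of class_weights in which every class seen in the
    mini-batch is replaced by the normalized mean of its sample features.

    class_weights: dict mapping class id -> weight vector
    features:      list of embeddings in the mini-batch
    labels:        class id of each embedding
    Simplified assumption: the old weight is overwritten, not blended.
    """
    grouped = {}
    for feature, label in zip(features, labels):
        grouped.setdefault(label, []).append(l2_normalize(feature))
    updated = dict(class_weights)
    for label, feats in grouped.items():
        # Average the features of this class dimension by dimension.
        mean = [sum(column) / len(feats) for column in zip(*feats)]
        updated[label] = l2_normalize(mean)
    return updated

weights = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 0.0]}
batch_features = [[2.0, 0.0], [0.0, 3.0]]  # e.g. one ID and one selfie
batch_labels = [1, 1]                      # both belong to class 1
new_weights = imprint_weights(weights, batch_features, batch_labels)
```

Only class 1 changes here; classes 0 and 2 are absent from the batch and keep their weights, which is why an update of this kind needs just the subset of weights sampled for the current mini-batch.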

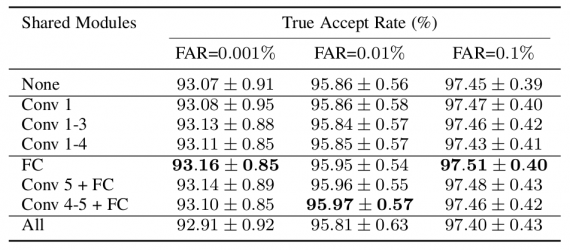

The researchers first train the popular Face-ResNet architecture using stochastic gradient descent and the AM-Softmax loss. They then fine-tune the model on the ID-selfie dataset by combining the proposed Dynamic Weight Imprinting optimization method with the Additive Margin Softmax loss. Finally, a pair of sibling networks that share high-level parameters is trained to learn domain-specific features of IDs and selfies.

Results

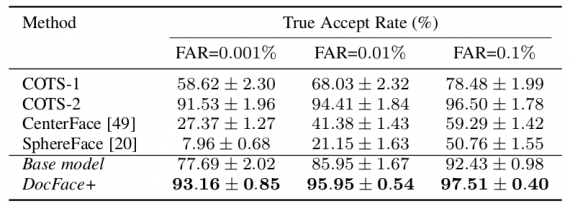

The proposed ID-selfie matching method achieves a true accept rate (TAR) of 97.51 ± 0.40%. The authors also report that their base model, trained on the MS-Celeb-1M dataset with the AM-Softmax loss function, achieves 99.67% accuracy on the standard verification protocol of LFW and a Verification Rate (VR) of 99.60% at a False Accept Rate (FAR) of 0.1% on the BLUFR protocol.

Comparison with other state-of-the-art methods

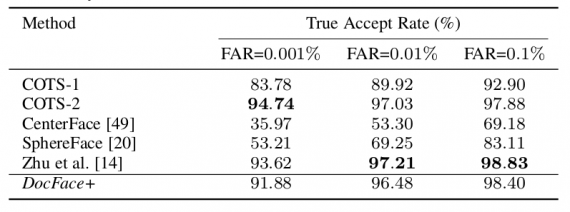

The approach was compared with other state-of-the-art general face matchers, since there are no existing public ID-selfie matching methods. The comparison is reported in terms of true accept rate (TAR) and false accept rate (FAR) and shown in the accompanying tables.
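For readers unfamiliar with these metrics, the sketch below shows one common way to compute TAR at a fixed FAR from similarity scores. It is an illustration and not the authors' evaluation code: the function name, the "accept if the score is strictly above the threshold" convention, and the toy scores are all assumptions.

```python
def tar_at_far(genuine_scores, impostor_scores, target_far):
    """Return (tar, threshold) at the lowest threshold whose FAR does not
    exceed target_far. A pair is accepted if its score is > threshold.

    genuine_scores:  similarity scores of same-person pairs
    impostor_scores: similarity scores of different-person pairs
    """
    impostors = sorted(impostor_scores, reverse=True)
    # Number of impostor pairs we may accept without exceeding the target.
    allowed = int(target_far * len(impostors))
    if allowed >= len(impostors):
        threshold = float("-inf")  # every pair may be accepted
    else:
        # At most `allowed` impostor scores lie strictly above this value.
        threshold = impostors[allowed]
    accepted = sum(1 for score in genuine_scores if score > threshold)
    return accepted / len(genuine_scores), threshold

# Toy scores, made up for illustration only.
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.5, 0.3, 0.2, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
tar, threshold = tar_at_far(genuine, impostor, target_far=0.1)
```

With these toy scores, one of the ten impostor pairs may be accepted, so the threshold lands on the second-highest impostor score and three of the four genuine pairs pass it.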

Conclusion

The proposed DocFace+ method for ID-selfie matching shows the potential of transfer learning, especially in tasks where not enough data is available. The method achieves high accuracy in selfie-to-ID matching and has the potential to be employed in identity verification systems. Additionally, the proposed optimization method, Dynamic Weight Imprinting, shows improved convergence and better generalization performance and represents a significant contribution to the field of machine learning.