Adobe’s Firefly 2 Combines Image Generation and Style Transfer Models

11 October 2023

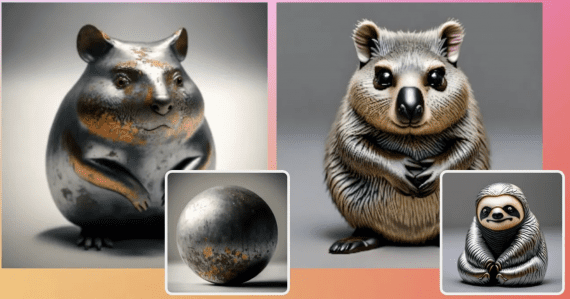

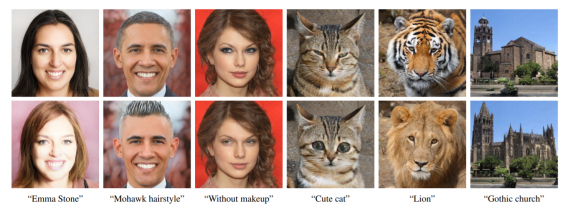

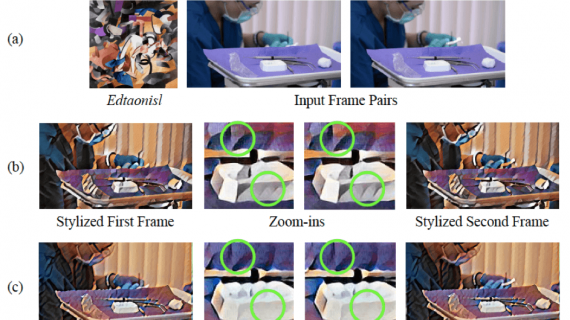

The Adobe Firefly 2 image generation model, currently in beta, is now available via the Firefly web application. The standout feature of Firefly 2 is Generative Match, designed for…