Human rights violations have occurred throughout human history, and today they appear in an increasing variety of forms around the world. The term refers to actions by state or non-state actors that breach any of the rights protecting individuals and groups from behaviour that interferes with fundamental freedoms and human dignity.

Photos and videos have become an essential source of information for human rights investigations, including Commissions of Inquiry and Fact-finding Missions. Investigators often receive digital images directly from witnesses, which provide high-quality corroboration of their testimonies. In most instances, however, investigators receive images from third parties (e.g. journalists or NGOs), and the provenance and authenticity of those images are unknown.

A third source of digital images is social media (e.g. images uploaded to Facebook), again with uncertainty regarding authenticity or source. The sheer volume of images means that manually sifting through them to verify whether any abuse is taking place, and then acting on it, would be tedious and time-consuming work for humans. For this reason, a software tool that identifies potential human rights abuses and can go through images quickly to narrow down the field would greatly assist human rights investigators. The major contributions of this work are as follows:

- A new dataset of human rights abuses, containing approximately 3k images for 8 violation categories.

- An assessment of the representation capability of deep object-centric CNNs and scene-centric CNNs for recognizing human rights abuses.

- An attempt to enhance human rights violations recognition by combining object-centric and scene-centric CNN features using different fusion mechanisms.

- An evaluation of the effects of different feature fusion mechanisms on human rights violations recognition.

Human Rights Violations Database

Many organizations concerned with human rights advocacy use digital images to expose human rights and international humanitarian law violations that might otherwise be impossible to bring to light. To advance the automated recognition of human rights violations, a well-sampled image database is required.

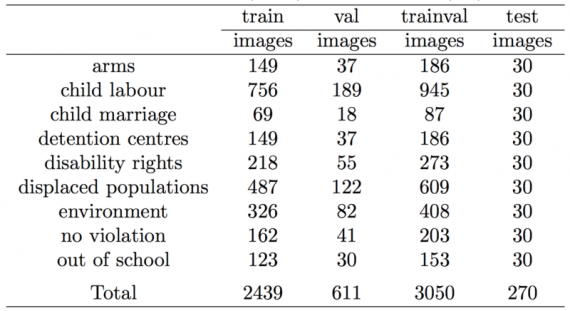

The Human Rights Archive (HRA) database is a repository of approximately 3k photographs of various human rights violations captured in real-world situations and surroundings. The photographs are labeled with eight semantic categories that comprise the types of human rights abuses encountered in the world, plus a supplementary ‘no violation’ class.

Human rights violations recognition is closely related to, but radically different from, object and scene recognition. For this reason, following a conventional image collection procedure is not appropriate for collecting images with respect to human rights violations. The first issue encountered is that the query terms for describing different categories of human rights violations must be provided by experts in the field of human rights.

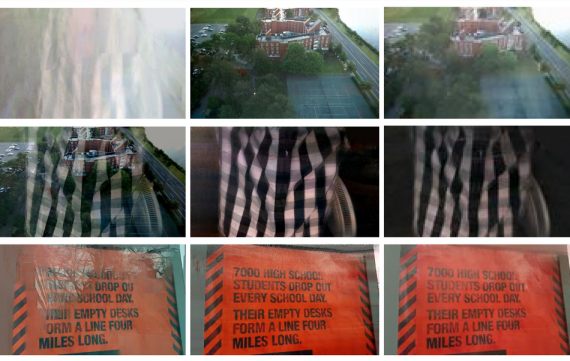

To create the dataset, non-governmental organizations (NGOs) and their public repositories are considered as image sources.

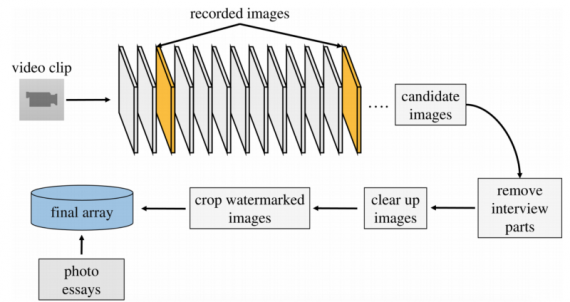

The first NGO considered is Human Rights Watch, which offers an online media platform that exposes human rights and international humanitarian law violations through various media types such as videos, photo essays, satellite imagery and audio clips. Its online repository contains nine main topics in the context of human rights violations (arms, business, children’s rights, disabilities, health and human rights, international justice, LGBT, refugee rights and women’s rights) and 49 subcategories. One considerable drawback of recording images from this platform's videos is the presence of a watermark in most of the video files. As a result, every recorded image that originally contained the watermark had to be cropped to remove it.

Only colour images of 600×900 pixels or larger were retained after the cropping stage. In addition to those images, all photo essays available for each topic and its subcategories are included, adding 342 more images to the final set. The entire pipeline used for collecting and filtering the pictures from Human Rights Watch is depicted in Figure 1.

The second source investigated is the United Nations, which presents an online collection of images in the context of human rights. Its website is equipped with a search mechanism that returns relevant images for both simple and complex query terms.

The final dataset contains 2847 images across 8 human rights violation categories. Of these, 367 ready-made images were downloaded from the two online repositories, representing about 12.9% of the entire dataset, while the remaining 2480 images were recorded from videos on the Human Rights Watch media platform. The eight categories are as follows:

- Arms

- Child Labour

- Child Marriage

- Detention Centres

- Disability Rights

- Displaced Populations

- Environment

- Out of School
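The composition figures quoted before the category list can be checked with a few lines of arithmetic. The sketch below uses only the counts stated in the text; the variable names are illustrative.

```python
# Counts as stated in the text above.
total_images = 2847
ready_made = 367      # downloaded directly from the two online repositories
from_videos = 2480    # recorded from Human Rights Watch videos

# The two sources should account for every image in the dataset.
assert ready_made + from_videos == total_images

# Share of the dataset that did not come from video frames, in percent.
ready_made_share = 100 * ready_made / total_images
print(f"ready-made: {ready_made_share:.2f}%  from videos: {100 - ready_made_share:.2f}%")
```

The ready-made share comes to just under 12.9%, so close to seven in every eight images originate from video.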

How It Works

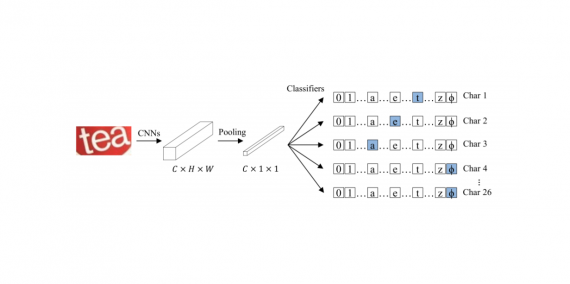

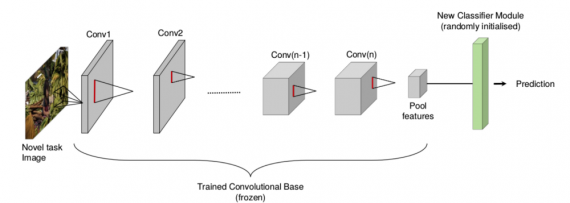

Given the impressive classification performance of deep convolutional neural networks, three modern object-centric CNN architectures (ResNet50, VGG16 and VGG19) are used and then fine-tuned on HRA to create baseline CNN models.

Transfer learning injects knowledge from other tasks by deploying the weights and parameters of a pre-trained network in a new one, and it has become a commonly used method for learning task-specific features.

Given the limited size of the dataset, the chosen approach to applying a deep CNN is to reduce the number of free parameters. To achieve this, the first filter stages are trained in advance on a different task (object or scene recognition) and held fixed during training on human rights violations recognition. Freezing the earlier layers, that is, preventing their weights from being updated during training, reduces the risk of overfitting.
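The effect of freezing can be illustrated with a deliberately tiny model. The following is a minimal sketch in plain Python, not the networks used in this work: the `Param` class, the two-parameter "network" and all numeric values are hypothetical, chosen only to show that a frozen parameter keeps its pre-trained value while the trainable head is fitted. In a framework such as Keras, the same idea is expressed by setting `trainable = False` on a layer.

```python
from dataclasses import dataclass


@dataclass
class Param:
    """A single scalar weight with a flag saying whether training may change it."""
    value: float
    trainable: bool = True


def sgd_step(params, x, target, lr=0.1):
    """One gradient-descent step on squared error; frozen parameters are skipped."""
    feature = params["features"].value * x           # "pre-trained" feature extractor
    error = params["head"].value * feature - target  # prediction minus target
    grads = {
        "features": 2 * error * params["head"].value * x,
        "head": 2 * error * feature,
    }
    for name, param in params.items():
        if param.trainable:                          # freezing = no weight update
            param.value -= lr * grads[name]


# Hypothetical starting point: the extractor weight stands in for a pre-trained value.
params = {
    "features": Param(value=0.5, trainable=False),   # frozen early layer
    "head": Param(value=0.0),                        # new task-specific layer
}

for _ in range(100):
    sgd_step(params, x=2.0, target=3.0)

print(params["features"].value, round(params["head"].value, 4))
```

Only the head parameter moves during training, so the number of free parameters that must be estimated from the small target dataset shrinks from two to one.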

The feature extraction modules are initialized using models pre-trained on two different large-scale datasets, ImageNet and Places. ImageNet is an object-centric dataset that contains images of generic objects, including people, and is therefore a good option for understanding the contents of the image region comprising the target person. In contrast, Places is a scene-centric dataset created specifically for high-level visual understanding tasks such as recognizing scene categories.

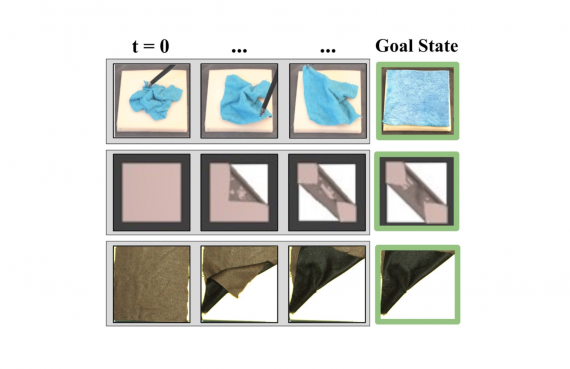

Hence, pre-training the image feature extraction model on Places provides global (high-level) contextual support. For the target task (human rights violation recognition), the network outputs scores for the eight target categories of the HRA dataset, or ‘no violation’ if none of the categories is present in the image.
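As a rough illustration of this output stage, the sketch below turns a vector of raw scores into a predicted label and a confidence. It assumes that ‘no violation’ is modelled as a ninth output alongside the eight categories and that scores are normalized with a softmax; neither detail is confirmed by the text, and the example scores are made up.

```python
import math

# Eight HRA categories plus the supplementary class (assumed to be a ninth output).
CLASSES = [
    "Arms", "Child Labour", "Child Marriage", "Detention Centres",
    "Disability Rights", "Displaced Populations", "Environment",
    "Out of School", "No Violation",
]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(scores):
    """Return the most probable class name and its probability."""
    if len(scores) != len(CLASSES):
        raise ValueError("expected one score per class")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


# Made-up scores for a single image, for illustration only.
example_scores = [0.2, 4.1, 0.3, -1.0, 0.0, 0.5, -0.7, 1.2, 0.1]
label, confidence = predict(example_scores)
print(label, round(confidence, 3))
```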

Results

The classification results for top-1 accuracy and coverage are listed below. Coverage, the fraction of examples for which the system can produce a response, is the more natural performance metric in this situation. In all experiments, a threshold of 0.85 on the prediction confidence is used to report coverage.

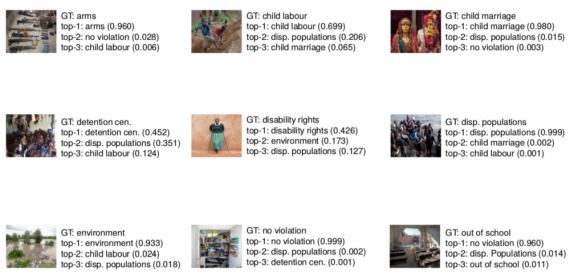
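The two metrics reported above can be sketched as follows. This is a minimal illustration rather than the evaluation code behind the results: it assumes the system "produces a response" when its top prediction confidence is at least the 0.85 threshold (whether the comparison is inclusive is an assumption), and the predictions, labels and confidences are made up.

```python
def top1_accuracy(predicted, actual):
    """Fraction of examples whose top prediction matches the true label."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("need two non-empty lists of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def coverage(confidences, threshold=0.85):
    """Fraction of examples whose top confidence reaches the threshold."""
    if not confidences:
        raise ValueError("need at least one confidence value")
    return sum(c >= threshold for c in confidences) / len(confidences)


# Made-up results for five images, for illustration only.
predicted = ["Arms", "Child Labour", "Environment", "Arms", "No Violation"]
actual = ["Arms", "Child Labour", "Out of School", "Arms", "Environment"]
confidences = [0.97, 0.91, 0.60, 0.70, 0.40]

accuracy = top1_accuracy(predicted, actual)
covered = coverage(confidences)
print(accuracy, covered)
```

In this toy example the fourth image is classified correctly but with a confidence below the threshold, so it counts toward accuracy and not toward coverage.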

Figure 3 shows the responses to examples predicted by the best-performing HRA-CNN, VGG19. Broadly, one type of misclassification can be identified given the current label attribution of HRA: images that depict evidence of a particular situation rather than the action itself, such as schools being targeted by armed attacks. Future development of the HRA database will explore assigning multiple ground-truth labels or free-form sentences to images, to better capture the richness of visual descriptions of human rights violations.

This technique addresses the problem of recognizing abuses of human rights from a single image. The HRA dataset is built from images captured in non-controlled environments that contain activities revealing a human right being violated, without any other prior knowledge. Using this dataset and a two-phase deep transfer learning scheme, a state-of-the-art deep learning algorithm is presented for the problem of visual human rights violations recognition. A technology capable of identifying potential human rights abuses in the same way as humans do has many potential applications in human-assistive technologies and would significantly support human rights investigators.