StyleCLIP is a combination of CLIP and StyleGAN models designed to manipulate image style with text prompts. The open-source code is available, including Google Colab notebooks.

Why is it needed

StyleGAN models are capable of generating highly realistic images, such as portraits of people who never existed. Despite their widespread use, they work like a black box. This means that sample images are needed to control the output. Otherwise, painstaking exploration of the multidimensional property space hidden under the hood of the model is required.

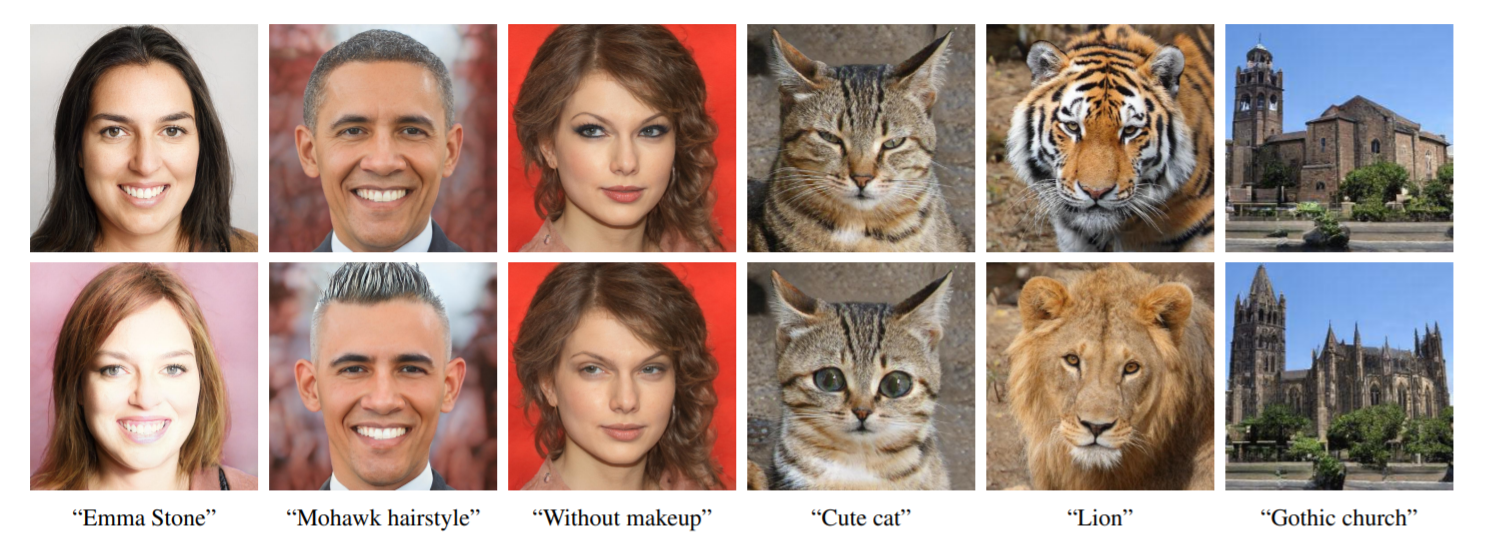

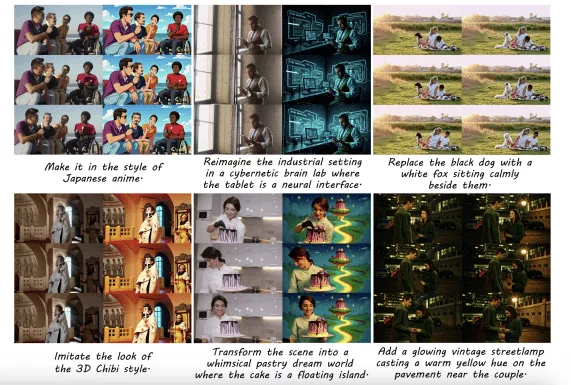

Attempts to create a text-based interface for managing StyleGAN are not new. However, existing interfaces only allow pre-defined image properties to be changed. Authors use CLIP to style images with arbitrary, intuitive textual descriptions.

How it works

A key feature of CLIP is the ability to recognize image content among natural language signatures. The CLIP model used in the paper has been pretrained on 400 million image-text pairs. Since natural language is capable of expressing a wide range of visual concepts, combining CLIP with the generation power of StyleGAN opens up the scope for manipulating image styles.

Three methods of combining CLIP with StyleGAN were investigated:

- Text-guided latent optimization, where a CLIP model is used as a loss network. This is the most versatile approach, but it requires a few minutes of optimization to apply a manipulation to an image.

- A latent residual mapper, trained for a specific text prompt. Given a starting point in latent space (the input image to be manipulated), the mapper yields a local step in latent space.

- A method for mapping a text prompt into an input-agnostic (global) direction in StyleGAN’s style space, providing control over the manipulation strength as well as the degree of disentanglement.

Thanks, I was looking for same