Driven by rapid progress and state-of-the-art performance across a wide range of tasks, deep learning methods are now used in many security-sensitive and critical applications. However, despite their remarkable, often beyond-human-level performance, these methods are vulnerable to carefully crafted input samples known as adversarial examples. In a game of “cat and mouse”, researchers have been competing to design stronger adversarial attacks on the one hand and more robust defense mechanisms on the other.

The problem of adversarial attacks is especially well studied in computer vision tasks such as object recognition and classification. In deep-learning-based image processing, small perturbations in the input space can cause a significant change in the output. Such perturbations are almost unnoticeable to humans and do not change the semantics of the image content, yet they can trick deep learning methods.

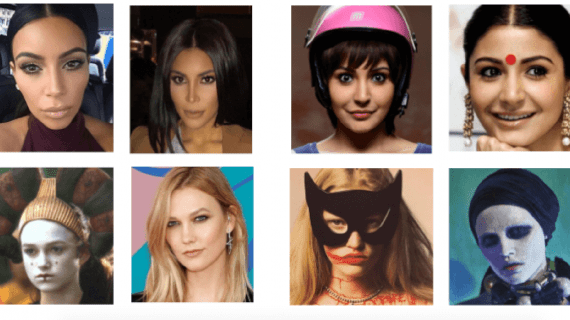

Adversarial attacks are a major concern in security-critical applications such as identity verification and access control. One particular target of adversarial attacks is face recognition.

Previous work

The excellent performance of deep learning methods for face recognition has led to their adoption in a wide variety of systems.

Adversarial attacks have already targeted face recognition systems. These attacks fall mainly into two groups: intensity-based and geometry-based. Many of them have proved very successful at fooling a face recognition system. In response, a number of defense mechanisms have been proposed to deal with the different kinds of attacks.

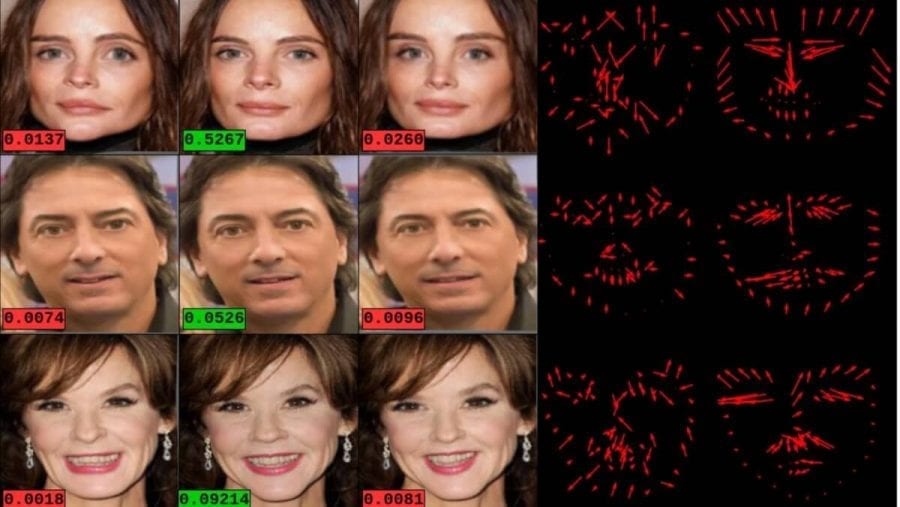

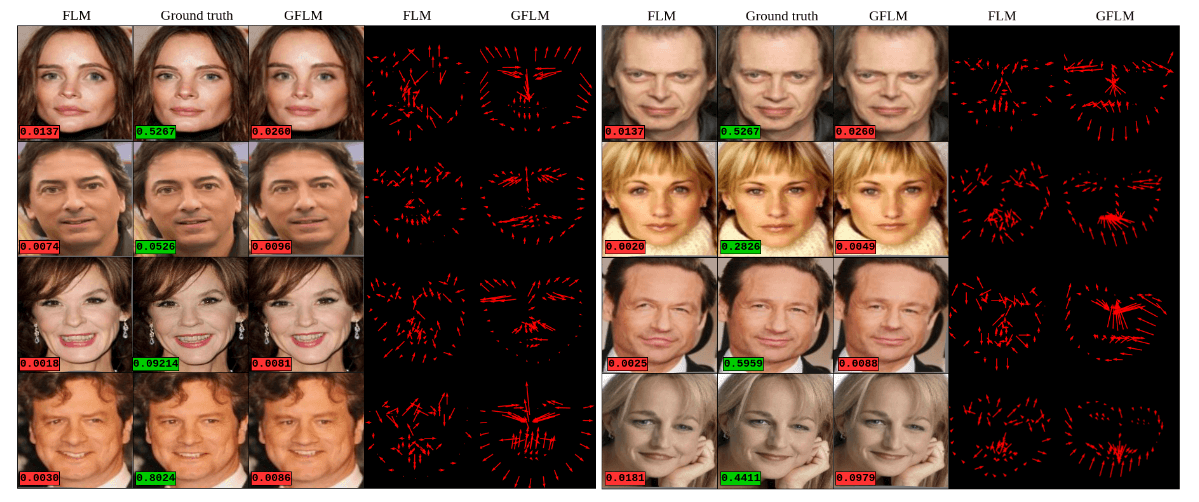


To exploit the vulnerability of face recognition systems and bypass defense mechanisms, increasingly sophisticated adversarial attacks have been developed. Some change pixel intensities, while others spatially transform benign images to perform the attack.

State-of-the-art idea

Researchers from West Virginia University have proposed a new, fast method for generating adversarial face images. Their approach defines a face transformation model based on facial landmark locations.

Method

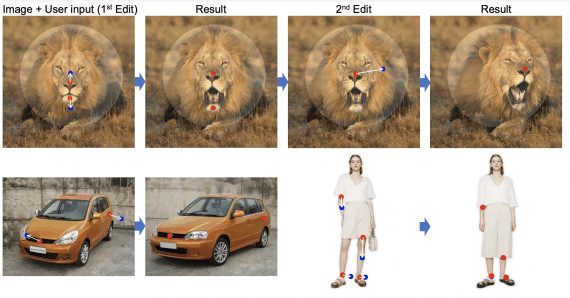

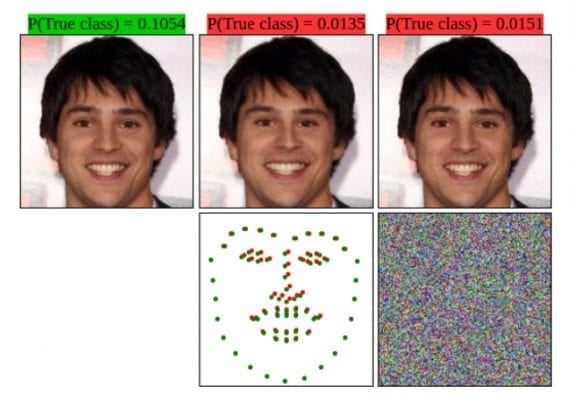

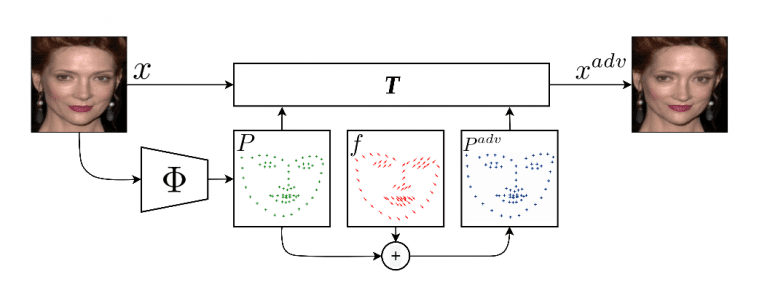

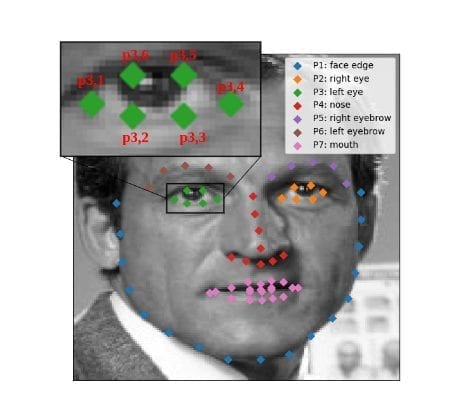

The researchers address the problem of transforming an image into an adversarial sample through landmark manipulation. The technique optimizes a displacement field, which is then used to spatially transform the input image. It is a geometry-based attack that can generate adversarial samples by modifying only a number of landmarks.
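To make the idea of a landmark-driven displacement field more concrete, here is a minimal sketch. It assumes inverse-distance weighting to spread the landmark displacements over the image; this interpolation scheme and the function name `interpolate_displacement` are illustrative choices, not the method described by the researchers.

```python
import math

def interpolate_displacement(pixel, landmarks, displacements, power=2.0, eps=1e-9):
    """Estimate the displacement at `pixel` from per-landmark displacements.

    Assumption: inverse-distance weighting, chosen here only for illustration.
    """
    weights = []
    for index, (lx, ly) in enumerate(landmarks):
        dist = math.hypot(pixel[0] - lx, pixel[1] - ly)
        if dist < eps:
            # The pixel sits on a landmark, so it moves exactly with it.
            return displacements[index]
        weights.append(1.0 / dist ** power)
    total = sum(weights)
    dx = sum(w * d[0] for w, d in zip(weights, displacements)) / total
    dy = sum(w * d[1] for w, d in zip(weights, displacements)) / total
    return (dx, dy)

# Two hypothetical landmarks and the displacement assigned to each.
landmarks = [(10.0, 10.0), (20.0, 10.0)]
displacements = [(1.0, 0.0), (0.0, 2.0)]

at_landmark = interpolate_displacement((10.0, 10.0), landmarks, displacements)
at_midpoint = interpolate_displacement((15.0, 10.0), landmarks, displacements)
```

A pixel on a landmark follows that landmark, while a pixel halfway between the two receives the average of both displacements. Evaluating the function at every pixel gives a dense field that an image-warping routine could then apply.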

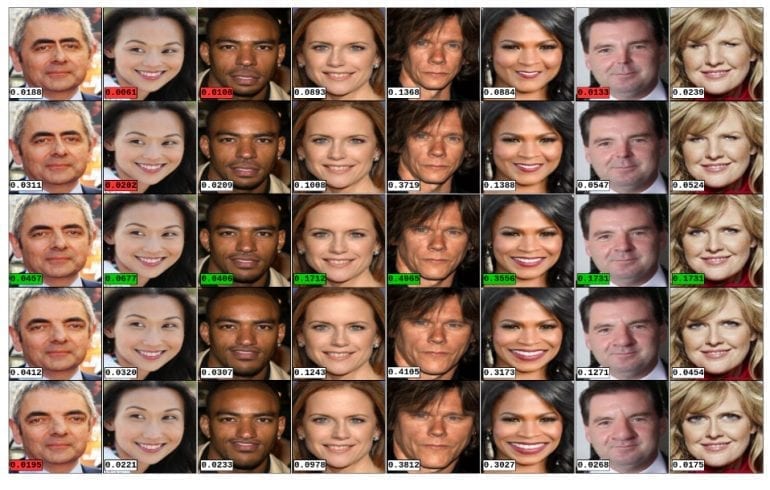

Because facial landmark locations provide highly discriminative information for face recognition, the researchers use the gradients of the prediction with respect to the landmark positions to update the displacement field. A scheme of the proposed method for generating adversarial face images is shown in the accompanying figure.
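The gradient-driven update can be illustrated with a toy example. The sketch below is built on several assumptions: `match_score` is a hypothetical stand-in for a classifier's confidence, the gradient is estimated with finite differences instead of backpropagation, and the step uses the sign of the gradient with a fixed size `epsilon`. None of these details are taken from the original method.

```python
def match_score(landmarks, template):
    """Toy stand-in for classifier confidence: highest when landmarks match the template."""
    return -sum((x - tx) ** 2 + (y - ty) ** 2
                for (x, y), (tx, ty) in zip(landmarks, template))

def numerical_gradient(score_fn, landmarks, h=1e-4):
    """Central-difference gradient of the score with respect to each landmark coordinate."""
    grads = []
    for i, (x, y) in enumerate(landmarks):
        def score_with(px, py):
            moved = list(landmarks)
            moved[i] = (px, py)
            return score_fn(moved)
        gx = (score_with(x + h, y) - score_with(x - h, y)) / (2 * h)
        gy = (score_with(x, y + h) - score_with(x, y - h)) / (2 * h)
        grads.append((gx, gy))
    return grads

def sign(value):
    return (value > 0) - (value < 0)

def attack_step(landmarks, displacement, score_fn, epsilon=0.5):
    """Move each landmark displacement one step against the gradient of the score."""
    moved = [(x + dx, y + dy) for (x, y), (dx, dy) in zip(landmarks, displacement)]
    grads = numerical_gradient(score_fn, moved)
    return [(dx - epsilon * sign(gx), dy - epsilon * sign(gy))
            for (dx, dy), (gx, gy) in zip(displacement, grads)]

# Hypothetical landmark positions and an enrolled template for the same face.
landmarks = [(10.0, 10.0), (20.0, 12.0)]
template = [(9.0, 10.5), (21.0, 11.0)]
score_fn = lambda pts: match_score(pts, template)

displacement = [(0.0, 0.0) for _ in landmarks]
score_before = score_fn(landmarks)
for _ in range(3):
    displacement = attack_step(landmarks, displacement, score_fn)
adversarial = [(x + dx, y + dy) for (x, y), (dx, dy) in zip(landmarks, displacement)]
score_after = score_fn(adversarial)
```

After three steps each coordinate has moved by at most `3 * epsilon`, and the toy score has dropped. In a real attack the score would be the recognition model's prediction and the gradient would come from automatic differentiation.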

Because the gradients can point in different directions, the displacement field can be updated in many possible ways. To address this, the researchers propose grouping the landmarks semantically. This allows the attack to manipulate the properties of each group instead of perturbing each landmark individually, which yields natural-looking images.
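One way to picture group-level manipulation is to give every landmark in a group the same scale and shift. The sketch below assumes exactly those two group properties and uses a made-up four-point layout in `GROUPS`. Both are illustrative assumptions and do not reproduce the parameterization used in the paper.

```python
def transform_group(landmarks, indices, scale=1.0, shift=(0.0, 0.0)):
    """Scale one landmark group about its centroid, then translate it.

    Landmarks outside `indices` are returned unchanged.
    """
    cx = sum(landmarks[i][0] for i in indices) / len(indices)
    cy = sum(landmarks[i][1] for i in indices) / len(indices)
    result = list(landmarks)
    for i in indices:
        x, y = landmarks[i]
        result[i] = (cx + scale * (x - cx) + shift[0],
                     cy + scale * (y - cy) + shift[1])
    return result

# Hypothetical grouping: indices into the landmark list, by face region.
GROUPS = {"eyes": [0, 1], "mouth": [2, 3]}
landmarks = [(30.0, 40.0), (70.0, 40.0), (40.0, 80.0), (60.0, 80.0)]

# Widen the mouth by 50% and move it down by two pixels.
moved = transform_group(landmarks, GROUPS["mouth"], scale=1.5, shift=(0.0, 2.0))
```

Because the whole group shares two parameters, the mouth keeps its shape while the eye landmarks stay where they were. This is the intuition behind preferring group properties over independent per-landmark perturbations.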

Results

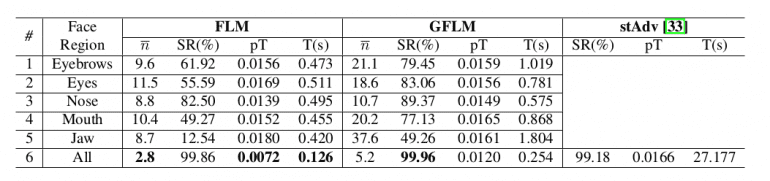

The new adversarial face generator was evaluated by measuring and comparing the performance of the attacks under several defense methods. To further explore the problem of generating adversarial face images, the researchers also assessed how spatially manipulating individual face regions affects the performance of a face recognition system.

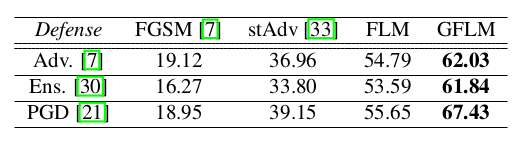

First, the performance was evaluated in a white-box attack scenario on the CASIA-WebFace dataset. Six experiments were run to investigate the importance of each face region in the proposed attack methods: one for each of the five main regions of the face (eyebrows, eyes, nose, mouth, and jaw) and one using all regions together. The results are given in the table.

Comparison with other state-of-the-art methods

The study also compares the proposed approach with several existing methods for generating adversarial faces, in terms of both success rate and speed.
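For readers who want to reproduce this kind of comparison, the two metrics can be sketched as follows. The definition of success rate used here (the fraction of correctly classified clean samples whose adversarial version is misclassified) is a common convention and an assumption; the article does not state the exact definition used in the study. Both function names are hypothetical.

```python
import time

def attack_success_rate(true_labels, clean_preds, adv_preds):
    """Share of correctly classified clean samples that the attack flips to a wrong label."""
    attackable = [(t, a) for t, c, a in zip(true_labels, clean_preds, adv_preds) if c == t]
    if not attackable:
        return 0.0
    return sum(a != t for t, a in attackable) / len(attackable)

def mean_seconds_per_sample(attack_fn, samples):
    """Average wall-clock time the attack spends on each sample."""
    start = time.perf_counter()
    for sample in samples:
        attack_fn(sample)
    return (time.perf_counter() - start) / len(samples)

# Made-up labels: the fourth sample is already misclassified, so it is excluded.
true_labels = [0, 1, 2, 3]
clean_preds = [0, 1, 2, 0]
adv_preds = [5, 1, 7, 0]

rate = attack_success_rate(true_labels, clean_preds, adv_preds)
seconds = mean_seconds_per_sample(lambda sample: sample, [1, 2, 3])
```

In this made-up example, two of the three attackable samples are fooled, so the success rate is two thirds.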

Conclusion

This approach shows that landmark manipulation can be a reliable way to change the prediction of face recognition classifiers. The new method can generate adversarial faces approximately 200 times faster than other geometry-based approaches. It creates natural-looking samples and can fool state-of-the-art defense mechanisms.