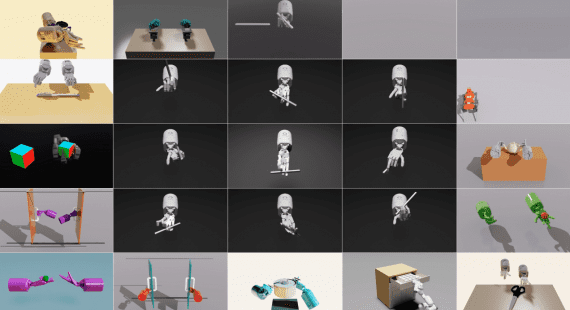

NVIDIA Isaac 5.0: Enhanced Sensor Physics and Expanded Synthetic Data Generation

19 May 2025

NVIDIA continues to push the boundaries of AI-driven robotics with significant updates to its Isaac ecosystem, announced at COMPUTEX 2025. These innovations address key challenges in robotics development by enhancing…