A group of researchers from Aalto University, Adobe Research, and NVIDIA has published a paper describing how to create interpretable GAN controls for image synthesis.

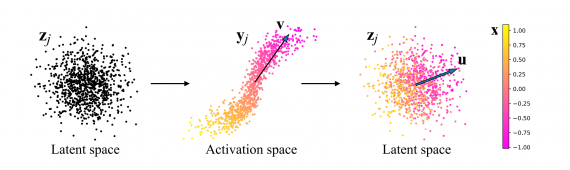

As they note in the paper, Generative Adversarial Networks (GANs) are powerful models for realistic image generation, but they offer little control over the generated content. The researchers propose a simple technique to overcome this problem: identifying significant latent directions by applying Principal Component Analysis (PCA) in the activation space.

The proposed technique rests on an important observation the researchers made about useful control directions: the principal components of activation tensors in the early layers of GANs represent important factors of variation, and can therefore be exploited as control mechanisms for image synthesis.
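To make the idea concrete, here is a minimal sketch of the PCA step, assuming you can sample latent codes and record the activations of an early layer. The two-layer network `toy_mapping` and its random weights are hypothetical stand-ins for a real pretrained generator; with an actual GAN you would record that model's activations instead. The sketch only shows how principal directions and their explained-variance ratios fall out of the sampled activations.

```python
import numpy as np

def toy_mapping(z, W1, W2):
    """Hypothetical stand-in for a GAN's early layers (not a real pretrained model)."""
    h = np.maximum(z @ W1, 0.0)  # ReLU nonlinearity
    return h @ W2

def pca_directions(activations, k):
    """Return the mean, the top-k principal directions (as rows) and their explained-variance ratios."""
    mu = activations.mean(axis=0)
    centered = activations - mu
    # SVD of the centered data: rows of vt are principal directions, sorted by singular value
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    var = s ** 2
    return mu, vt[:k], var[:k] / var.sum()

rng = np.random.default_rng(0)
latent_dim, act_dim, n_samples = 64, 128, 10_000
W1 = rng.standard_normal((latent_dim, act_dim)) / np.sqrt(latent_dim)
W2 = rng.standard_normal((act_dim, act_dim)) / np.sqrt(act_dim)

z = rng.standard_normal((n_samples, latent_dim))  # sample random latent codes
acts = toy_mapping(z, W1, W2)                    # record early-layer activations
mu, V, ratio = pca_directions(acts, k=10)

print(V.shape)
print(ratio.round(3))
```

Computing PCA through an SVD of the centered data avoids forming the covariance matrix explicitly and returns the directions already sorted by the variance they explain.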

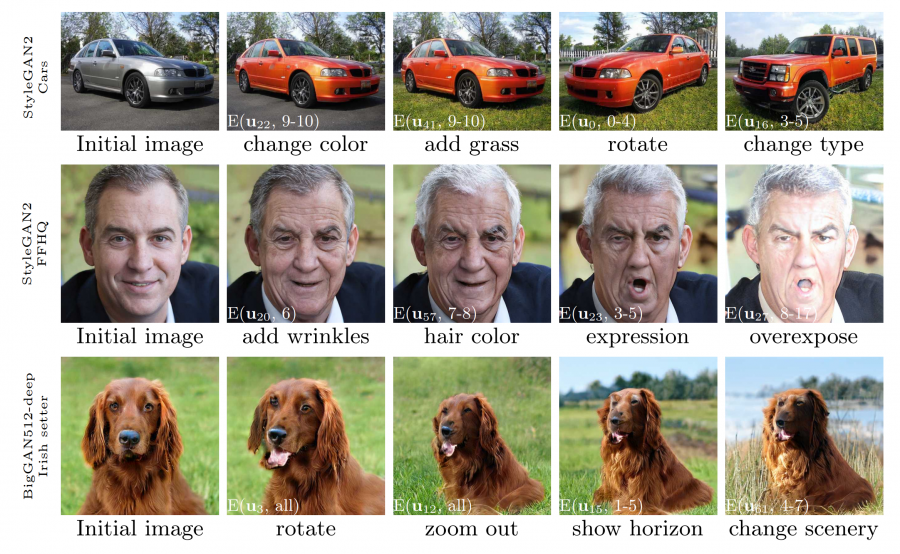

The paper also analyzes what each PCA component controls. It turns out that the first few components control large-scale variations such as geometric configuration, viewpoint, head rotation, and gender expression. The researchers used several datasets to analyze GANs and test their technique, and they also identified interpretable controls for aging, lighting, and time of day.
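Once principal directions are available, an edit amounts to shifting a code along one or more of them, i.e. w' = w + x·V, where each entry of x sets how far to move along the corresponding component. The sketch below assumes an orthonormal basis `V` built from random data purely for illustration; in practice it would come from PCA on a real GAN, and which attribute (say, viewpoint) a given component controls has to be found by inspecting the generated images, as in the paper's analysis. The helper `edit_along_components` is hypothetical.

```python
import numpy as np

def edit_along_components(w, V, x):
    """Shift code w along principal directions: w' = w + x @ V.

    V: (k, d) principal directions as rows; x: (k,) step size per component.
    """
    return w + x @ V

rng = np.random.default_rng(1)
d, k = 128, 5
# Hypothetical orthonormal basis standing in for PCA directions of a real GAN
V = np.linalg.qr(rng.standard_normal((d, k)))[0].T  # shape (k, d)
w = rng.standard_normal(d)                          # a code to be edited

# Walk along the first component only; other components stay fixed
for step in (-2.0, 0.0, 2.0):
    x = np.zeros(k)
    x[0] = step
    w_edit = edit_along_components(w, V, x)
    # Projection of the change onto the first direction recovers the step size
    print(step, round(float((w_edit - w) @ V[0]), 6))
```

Feeding each `w_edit` to the generator would then yield a sequence of images that vary along that single factor.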

The implementation of GANSpace, as the technique is named, is open source and available on GitHub. More details about the method and the analysis can be found in the paper published on arXiv.