It is no secret that in a noisy environment our brain can focus on one particular speaker and “tune out” background sounds. This phenomenon is popularly known as the “cocktail party effect”. Yet although the phenomenon has been well studied, automatically separating the speech of a particular speaker remains a difficult task for machines.

To address this, the Looking to Listen project was created around a combined audio-visual model. Its technology can isolate one person’s speech from a soundtrack (including background noise and other people’s voices) and mute all other sounds.

The method works on ordinary videos with a single audio track. All users have to do is select the face of the person they want to hear in the video.

The technology has a wide range of possible applications, from speech recognition to hearing aids, which today work poorly when several people speak simultaneously.

The new technology uses both the audio and the video to separate speech. Because a person’s lip movements correlate with the sounds they produce, it is possible to determine which part of the audio stream belongs to a particular person. This significantly improves the quality of speech separation compared with audio-only systems, especially when several people are speaking at once.

More importantly, the technology makes it possible to tell who in the video says what, by linking the separated speech to specific speakers.

How does it work?

A collection of 100,000 YouTube videos of lectures and conversations was used as training data for the neural network. From these, fragments with “pure speech” (no background music, audience sounds or other speakers) and a single speaker in the frame were extracted, about 2000 hours in total.

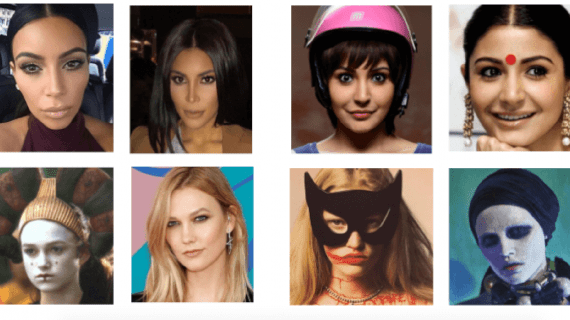

These “pure” data were then used to create “synthetic cocktail parties”: videos that combine the faces of several speakers with a mix of their clean speech and background noise taken from AudioSet.
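The audio side of such a mixture can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `make_synthetic_mixture`, the `noise_gain` value, and the stand-in signals (pure tones instead of speech, white noise instead of AudioSet clips) are hypothetical and not taken from the project.

```python
import numpy as np

def make_synthetic_mixture(speech_a, speech_b, noise, noise_gain=0.3):
    """Mix two clean speech clips and background noise into one track.

    All inputs are 1-D float arrays at the same sample rate.
    noise_gain is an illustrative value, not one used by the project.
    """
    n = min(len(speech_a), len(speech_b), len(noise))
    mixture = speech_a[:n] + speech_b[:n] + noise_gain * noise[:n]
    # The clean clips are kept as the targets the network should recover.
    targets = np.stack([speech_a[:n], speech_b[:n]])
    return mixture, targets

# Stand-in signals: two tones instead of real speech, white noise as background.
sr = 16000
t = np.arange(sr) / sr
speech_a = np.sin(2 * np.pi * 220 * t)
speech_b = np.sin(2 * np.pi * 330 * t)
noise = np.random.default_rng(0).standard_normal(sr)

mixture, targets = make_synthetic_mixture(speech_a, speech_b, noise)
```

Because the clean clips are known in advance, each synthetic mixture comes with its ground-truth separated streams, which is what makes supervised training possible.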

As a result, it was possible to train a convolutional neural network to extract from the “cocktail party” a separate audio stream for each person speaking in the video.

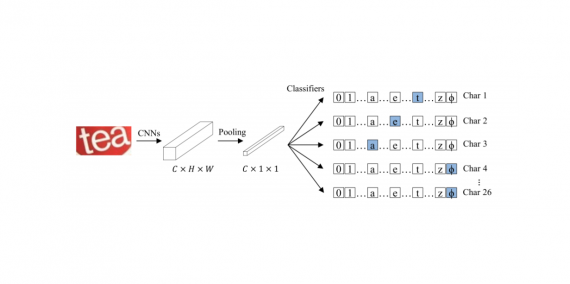

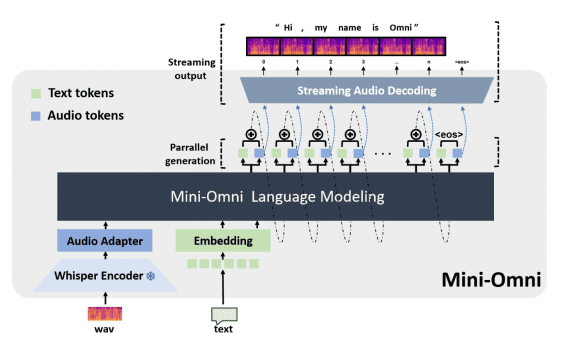

The architecture of the neural network is shown in the diagram below:

First, the detected faces are extracted from the video stream, and a convolutional neural network learns features of each face. From the audio stream, the system first computes a spectrogram using the short-time Fourier transform (STFT) and then processes it with a similar neural network. The processed audio and video signals are fused into a joint audio-visual representation, which is further processed by a bidirectional LSTM and three fully connected layers.
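To make the STFT step concrete, here is a minimal NumPy sketch of turning a waveform into a complex spectrogram. The frame length, hop size, and sample rate are assumptions chosen for illustration, and the helper `stft` is hypothetical, not the project's code.

```python
import numpy as np

def stft(signal, frame_len=400, hop=160):
    """Short-time Fourier transform.

    Splits the signal into overlapping Hann-windowed frames and takes the
    FFT of each one. Returns a complex array of shape (frames, bins).
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    return np.fft.rfft(frames, axis=1)

# One second of a 1 kHz tone at an assumed 16 kHz sample rate.
sr = 16000
t = np.arange(sr) / sr
audio = np.sin(2 * np.pi * 1000 * t)

spec = stft(audio)
# Each bin spans sr / frame_len = 40 Hz, so the tone peaks in bin 25.
peak_bin = int(np.abs(spec).mean(axis=0).argmax())
```

The result keeps both magnitude and phase for every time frame and frequency bin, which is the kind of time-frequency input the audio branch of the network works on.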

For each speaker, the network outputs a complex spectrogram mask. The mask is multiplied by the spectrogram of the original “noisy” audio, and the result is converted back into a waveform to obtain an isolated speech signal for that speaker.
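The masking step can be sketched as follows. In the real system the mask is predicted by the network; here, as a labeled assumption, an "ideal" mask is computed directly from the known clean signal, purely to show the multiply-and-invert mechanics. The helpers `stft` and `istft`, the frame sizes, and the test signals are all hypothetical.

```python
import numpy as np

FRAME, HOP = 512, 128  # illustrative frame and hop sizes
WINDOW = np.hanning(FRAME)

def stft(x):
    """Complex spectrogram of shape (frames, bins)."""
    n = 1 + (len(x) - FRAME) // HOP
    frames = np.stack([x[i * HOP : i * HOP + FRAME] * WINDOW for i in range(n)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, length):
    """Inverse STFT by windowed overlap-add."""
    frames = np.fft.irfft(spec, n=FRAME, axis=1)
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * HOP : i * HOP + FRAME] += frame * WINDOW
        norm[i * HOP : i * HOP + FRAME] += WINDOW ** 2
    return out / np.maximum(norm, 1e-8)

# Stand-in "cocktail party": two tones plus white noise.
sr = 16000
t = np.arange(sr) / sr
speech_a = np.sin(2 * np.pi * 220 * t)
speech_b = np.sin(2 * np.pi * 330 * t)
noise = 0.1 * np.random.default_rng(0).standard_normal(sr)
mixture = speech_a + speech_b + noise

mix_spec = stft(mixture)
# Assumption: an oracle complex mask stands in for the network's prediction.
mask_a = stft(speech_a) / (mix_spec + 1e-12)

# Multiply the mask with the noisy spectrogram, then go back to a waveform.
recovered_a = istft(mask_a * mix_spec, len(mixture))
```

Because the mask is complex, it corrects both the magnitude and the phase of every time-frequency bin, so with a perfect mask the recovered waveform matches the clean signal apart from the first and last frame.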

More information about the technology and the project’s results can be found in the project documentation and on its GitHub page.

The Results

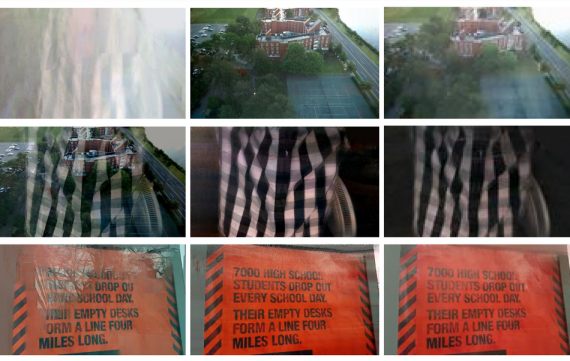

Below are the results of applying this technology to several videos. All sounds other than the speech of the selected person can be either turned off completely or attenuated to the required level.

This technology can be useful for speech recognition and automatic captioning. Existing systems cope poorly when the speech of several people overlaps. Separating sound “by source” yields more accurate and easier-to-read captions.